DSI3500 (RamSan-500) Solid State Storage put to the test

Flash storage was one of several products Gartner listed as among "the most important technologies in your data center future." So … time to write about a great device we have in the Performance Center for our customers to test their high-end solutions on: a 1 Terabyte DSI3500 with the full 8x 4 Gbit fibers, hooked up to a 96-core ES7000 Enterprise Server with 512 GB RAM and 16 dual-port Emulex LPe12002-M8 and LPe11002-M4 HBAs, running Windows 2008 R2 Datacenter and SQL 2008 R2 (November CTP). Let’s put it to the test and see why!

The DSI website (http://www.dynamicsolutions.com/sites/default/files/DSI3500.pdf) states:

“ the DSI3500 blends two different solid state technologies, RAM and Flash, to create the industry’s first Cached Flash system. A loaded system consists of 2TB of usable RAID protected Flash capacity and up to 64 GB of DDR RAM cache. The DSI3500 takes advantage of this large DDR RAM cache to isolate the Flash memory from frequently written data and to allow for massive parallelization of accesses to the back end Flash. By using a large DDR RAM cache, sophisticated controller, and a large number of Flash chips, the DSI3500 is able to leverage the read performance, density, and sequential write performance of Flash. The unique advantage of the DSI3500 is its tight integration of high capacity Flash storage and the large high-speed DDR RAM cache. The 16-64 GB RAM cache provides a sustained dataflow (2-GB/sec) between Flash storage and the SAN Enterprise Servers. Its 2-8 Fibre Channel ports provide true access to exceptional bandwidth and IOPS capability.”

So … let’s see if we can squeeze 100K IOPS and 2 GByte/sec out of it … ;-)

Installation and configuration

Installation is a straightforward process. Below are the steps to configure Windows MPIO, have the DSI expose some LUNs and create a Windows mount point.

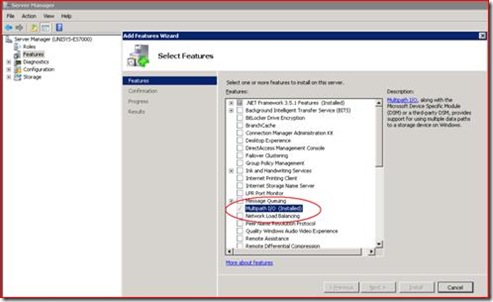

1) Plug a fiber cable, a network cable and 2 power cords into the DSI and activate the Windows Multipath I/O (MPIO) feature:

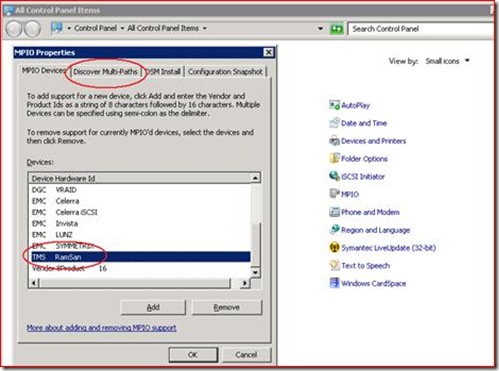

2) In the Windows Control Panel, select the MPIO icon, open the “Discover Multi-Paths” tab, select TMS RamSan and press the “Add” button. After a reboot it will show up in the MPIO device list:

3) Hook up all 8 fiber cables.

4) Configure the DSI:

– Use either a DHCP address or assign one manually through the front panel menu, then point a web browser at that address;

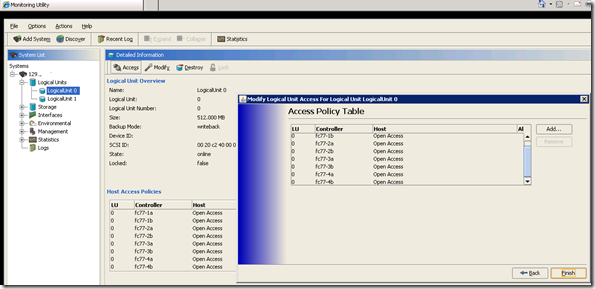

Configure 1 or more LUNs. I configured 2 LUNs of 512000 MB each and set the access policy to “Open Access” on all 8 fiber controller paths.

With 8 shared fiber paths to the LUNs this will allow maximum throughput.

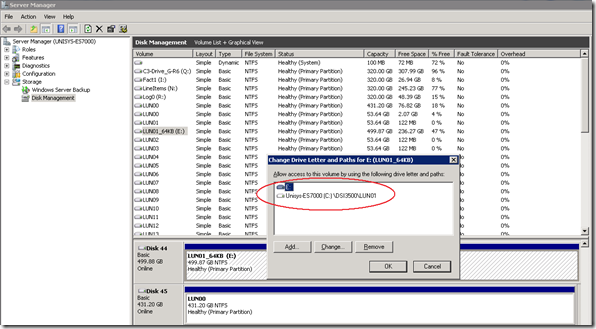

5) Create a Windows mount point for each of the LUNs and format each LUN with NTFS using a 64 KB allocation unit size. And that’s it!

Within 30 minutes the whole installation is complete and ready to go.

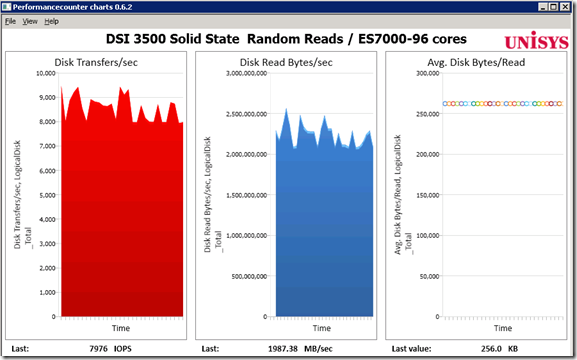

Test 1a): Testing the Maximum throughput with SQLIO for 256 KB Random Reads on a 10 GByte file.

A great and simple utility to check out the IO metrics of a storage subsystem is SQLIO. Typically there are 2 things to check first: the maximum number of IOs that can be processed (small 8 KB IOs) and the maximum throughput (MBytes/sec with larger blocks of 256 KB or more).

By default SQLIO will allocate only a small file of 256 MB to test with, which typically results in all cache hits without touching the disks or SAN backend, so we will specify a larger 10 GByte file to test with.

(SQLIO is downloadable here: http://www.microsoft.com/downloads/details.aspx?familyid=9a8b005b-84e4-4f24-8d65-cb53442d9e19&displaylang=en)

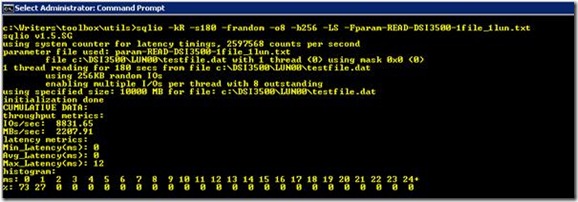

Command: sqlio -kR -s180 -frandom -o8 -b256 -LS -Fparam-READ-DSI3500-1file_1lun.txt
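For readers less familiar with SQLIO, the switches in the command above can be read as follows. This is a minimal sketch in Python that only assembles the command string; the helper name `build_sqlio_command` and its parameter names are made up for illustration and are not part of SQLIO itself.

```python
# Hypothetical helper (not part of SQLIO): rebuilds the command line used above
# so that each switch is spelled out next to its meaning.
def build_sqlio_command(param_file, kind="R", seconds=180, pattern="random",
                        outstanding=8, block_kb=256):
    flags = [
        f"-k{kind}",         # R = read test, W = write test
        f"-s{seconds}",      # test duration in seconds
        f"-f{pattern}",      # access pattern: random or sequential
        f"-o{outstanding}",  # outstanding I/Os per thread
        f"-b{block_kb}",     # I/O block size in KB
        "-LS",               # report latency, measured with the system timer
        f"-F{param_file}",   # parameter file that lists the test files
    ]
    return "sqlio " + " ".join(flags)


print(build_sqlio_command("param-READ-DSI3500-1file_1lun.txt"))
```

Changing `block_kb` to 8 would give the small-block variant used for IOPS testing, assuming the other switches stay the same.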

Content of parameter file:

c:\DSI3500\LUN00\testfile.dat 1 0x0 10000

Result: random 256 KB reads from a single 10 GB file run at 2207.01 MB/sec, with an average latency of “zero” milliseconds as reported by sqlio: 72% of the IOs completed in 0 msec and 27% of the IOs completed in 1 msec.
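The “zero” millisecond average can be sanity-checked with Little's Law (outstanding IOs = IO rate x latency). The sketch below assumes that the 8 outstanding IOs requested with -o8 were kept in flight for the whole run and that sqlio counts 1 MB as 1024 KB; the function name is hypothetical.

```python
# Little's Law: outstanding_ios = ios_per_sec * latency_sec
# Assumption: the -o8 queue depth was sustained by the single reader thread.
def avg_latency_ms(throughput_mb_s, block_kb, outstanding_ios):
    ios_per_sec = throughput_mb_s * 1024 / block_kb  # 256 KB blocks -> 4 IOs per MB
    return outstanding_ios / ios_per_sec * 1000


latency = avg_latency_ms(2207.01, block_kb=256, outstanding_ios=8)
print(f"estimated average latency: {latency:.2f} ms")
```

Under these assumptions the estimate lands just below 1 ms, which fits a distribution where nearly all IOs are reported as completing in 0 or 1 msec.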

So with just one file and 1 thread we are already reading more data than what is specified by DSI (2 GB/sec).

This throughput is already amazing, but some quick math shows there is more headroom: with a total of 8 fiber paths, the theoretical maximum is 8x 4 Gbit/sec, which is close to 3200 MB/sec!

The most obvious ways to get the random read throughput up are to add more threads reading from the same file, or to specify more files to read from in parallel.
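The quick math can be written out as below. It is a rough sketch that assumes the commonly quoted nominal payload rate of about 400 MB/sec per 4 Gbit Fibre Channel link; the constant names are illustrative.

```python
LINKS = 8
NOMINAL_MB_PER_LINK = 400  # assumption: nominal payload rate of one 4 Gbit FC link

theoretical_mb_s = LINKS * NOMINAL_MB_PER_LINK
measured_mb_s = 2207.01  # test 1a: one file, one thread

print(f"theoretical maximum: {theoretical_mb_s} MB/sec")
print(f"test 1a reaches {measured_mb_s / theoretical_mb_s:.0%} of that")
```

So the single-threaded run leaves roughly a third of the fiber bandwidth unused.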

SQLIO supports both tests by making some changes to the parameter file:

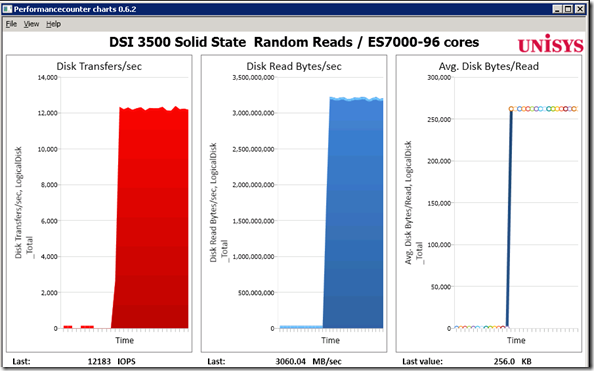

Test 1b): Testing the Maximum throughput with SQLIO for 256 KB Random Reads on a 10 GByte file with 2 threads.

With two threads reading random IOs the throughput goes up to an average of 2992 MB/sec; that’s an additional 784 MB/sec of throughput!

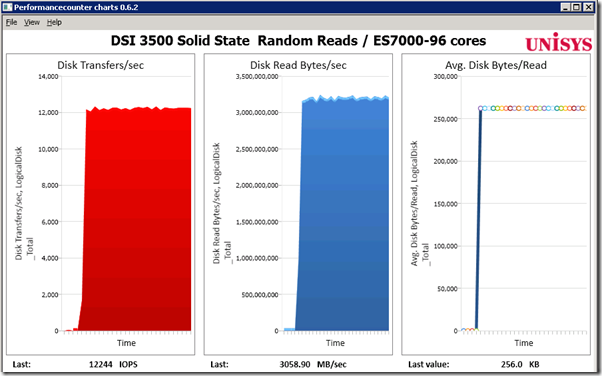

Test 1c): Reading random 256 KB IOs from 2x 10 GB files, single threaded each.

So adding a thread works great to get more throughput. Very likely the second option, adding a second file with its own thread, will also ramp up the throughput.

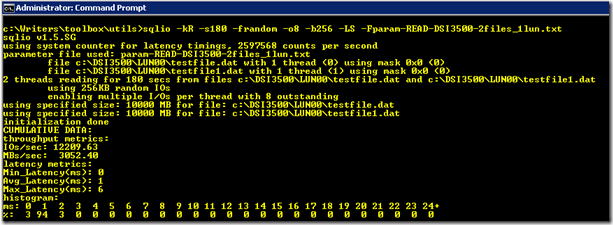

Command: sqlio -kR -s180 -frandom -o8 -b256 -LS -Fparam-READ-DSI3500-2files_1lun.txt

Content of parameter file:

c:\DSI3500\LUN00\testfile.dat 1 0x0 10000

c:\DSI3500\LUN00\testfile1.dat 1 0x0 10000
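Each line of a parameter file holds four fields: the file path, the number of threads for that file, an affinity mask and the file size in MB. A small sketch that generates such files is shown below; the function names are hypothetical and the script only builds text, it does not run SQLIO.

```python
# Hypothetical helpers: build SQLIO parameter file content.
# Field order per line: <file path> <threads> <affinity mask> <file size in MB>
def param_line(path, threads, size_mb, mask="0x0"):
    return f"{path} {threads} {mask} {size_mb}"


def param_file(paths, threads_per_file, size_mb):
    return "\n".join(param_line(p, threads_per_file, size_mb) for p in paths)


files = [r"c:\DSI3500\LUN00\testfile.dat", r"c:\DSI3500\LUN00\testfile1.dat"]
print(param_file(files, threads_per_file=1, size_mb=10000))
```

Raising `threads_per_file` to 2 would presumably produce the layout for the two-threads-per-file runs, since only the thread count field changes.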

The throughput reading from 2 files in parallel increases to an average of 3052 MB/sec, which is an 844 MB/sec increase compared to single threaded single file access.

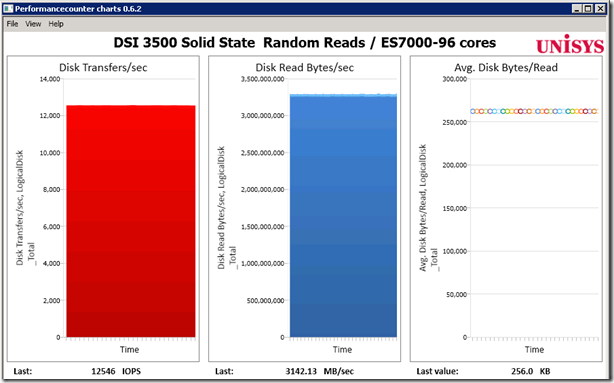

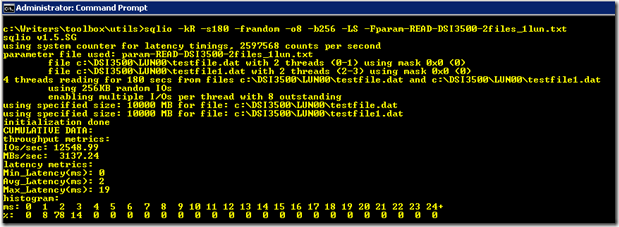

Test 1d): Reading random 256 KB IOs from 2x 10 GB files, two threads each.

With 2 files being read by 2 threads each, both the Disk Transfers/sec and Disk Read Bytes/sec counters show flat lines! That tells you that some limit has been reached: the throughput peaks at 3142 MB/sec, which is the throughput limit of the 8x 4 Gbit fibers!

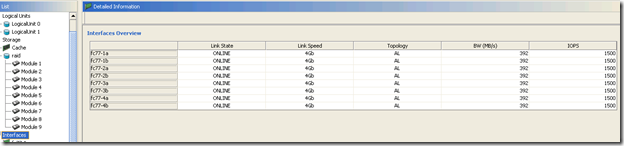

When checking the throughput on the fiber interface level, all 8 fiber interfaces are at 392 MB/sec Bandwidth each.

These quick tests with SQLIO show that we can sustain a maximum throughput of over 3 GByte/sec!
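The four throughput results can be put side by side with a few lines of Python. The figures are the ones reported in tests 1a to 1d; the 8 x 400 MB/sec ceiling is an assumed nominal value for 4 Gbit Fibre Channel links, and the variable names are illustrative.

```python
THEORETICAL_MB_S = 8 * 400  # assumption: ~400 MB/sec nominal per 4 Gbit link

# Measured averages from the tests above (MB/sec)
results = {
    "1a: 1 file, 1 thread": 2207.01,
    "1b: 1 file, 2 threads": 2992,
    "1c: 2 files, 1 thread each": 3052,
    "1d: 2 files, 2 threads each": 3142,
}

baseline = results["1a: 1 file, 1 thread"]
for name, mb_s in results.items():
    gain = mb_s - baseline
    share = mb_s / THEORETICAL_MB_S
    print(f"{name:<28} {mb_s:8.1f} MB/sec  gain {gain:6.1f}  {share:.0%} of ceiling")

# Cross-check: 8 fiber interfaces at 392 MB/sec each
per_port_total = 8 * 392
print(f"sum over the 8 ports: {per_port_total} MB/sec")
```

The per-port sum comes out within a few MB/sec of the 3142 MB/sec peak, which supports the reading that the fibers themselves are the limit in test 1d.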

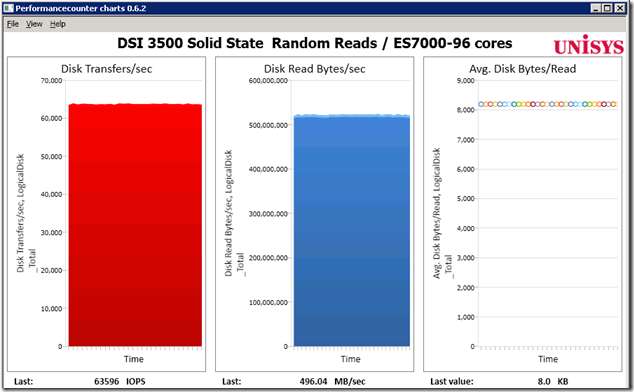

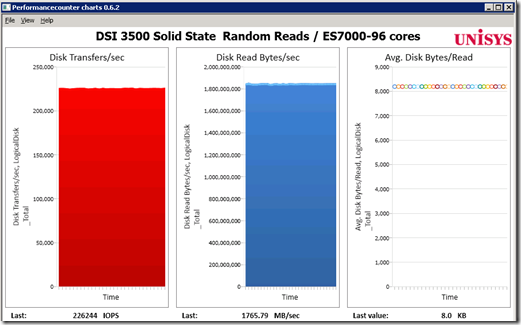

Test 1e): Maximum number of random 8 KB IOs.

The second part to check out is how many IO’s per second (IOPS) can be serviced.

SQL Server works with 8 KB pages and 64 KB extents, and with read IOs of up to 4 MByte during backup.

So let’s check how many IOPS we can get with 8 KByte IOs.

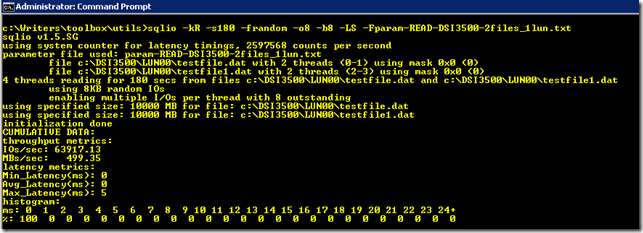

With 2 files / 2 threads each we are getting 63917 random 8 KB reads per second.

The flat lines in the graph below show that there’s some sort of bottleneck.

The fastest way to find out whether this is a Windows OS / file system limit or a hardware limit is, as before, to simply increase the number of files:

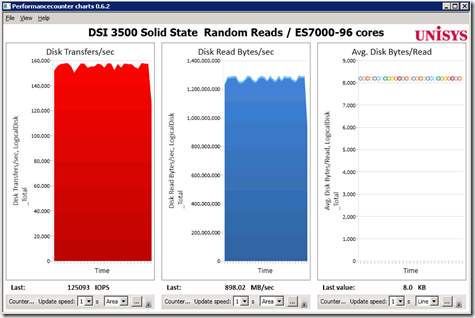

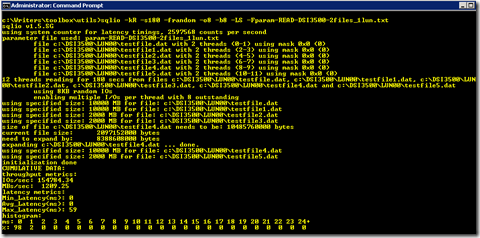

By specifying 6 files with 2 threads each, the IOPS almost double, from 63917 to over 125000!

So let’s increase the number of parallel files even further; with 10 files the number of IOs that we can squeeze out of and service with a single Windows LUN is more than 225000 IOPS! We have 8 fibers connected, so each is servicing (225000 / 8 =) 28125 IOPS per fiber, which isn’t near a hardware bottleneck.
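To put the IOPS figure in perspective it helps to convert it to bandwidth. The sketch below assumes 1 MB = 1024 KB and uses a hypothetical helper name.

```python
def iops_to_mb_s(iops, block_kb):
    # Convert an IO rate to bandwidth, taking 1 MB = 1024 KB
    return iops * block_kb / 1024


total_iops = 225000
fibers = 8

per_fiber_iops = total_iops / fibers
total_mb_s = iops_to_mb_s(total_iops, block_kb=8)

print(f"{per_fiber_iops:.0f} IOPS per fiber")
print(f"{total_mb_s:.0f} MB/sec in total, {total_mb_s / fibers:.0f} MB/sec per fiber")
```

At 8 KB per IO the 225000 IOPS add up to well under 2 GB/sec, far below the bandwidth reached with 256 KB reads, so in bandwidth terms the fibers are indeed not the limiting factor here.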

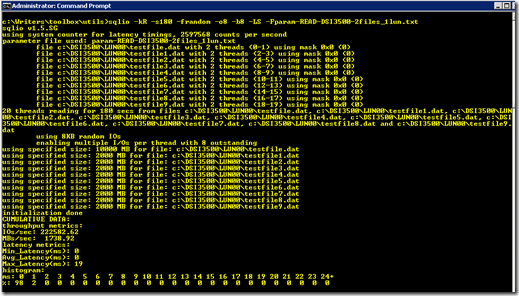

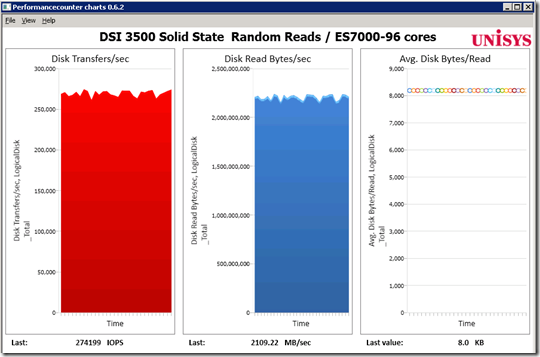

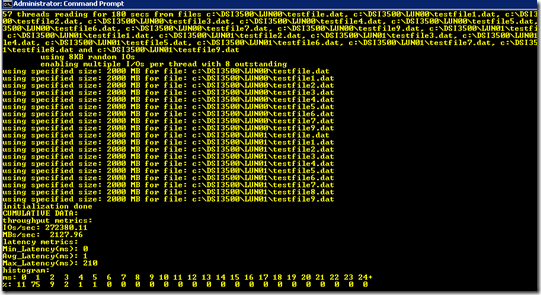

So, let’s add the second LUN and read from multiple files that are spread across both LUNs.

With multiple files spread over 2 LUNs the IO rate increases to, on average, over 272000 IOPS, and 86% of all IOs are still serviced within 1 millisecond!

This is way more than the 100000 IOPS that DSI lists on the specsheet.

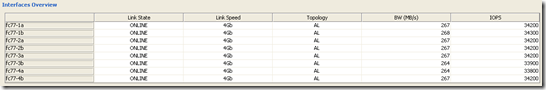

The fiber interfaces are processing over 34000 IOPS each:

Conclusion: the DSI3500 specifications are very modest and can be achieved without special tricks. They can also be outperformed significantly by using 2 LUNs and accessing multiple files in parallel. So on to the next test, to see how hard we can drive SQL Server!
