Scenario:

Imagine you have a single table with 400 Million rows and 46 GByte in size that you want to read as fast as possible. How can you check if you run @ top speed?

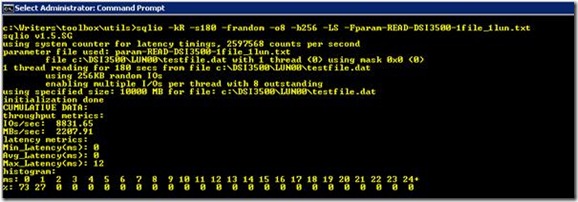

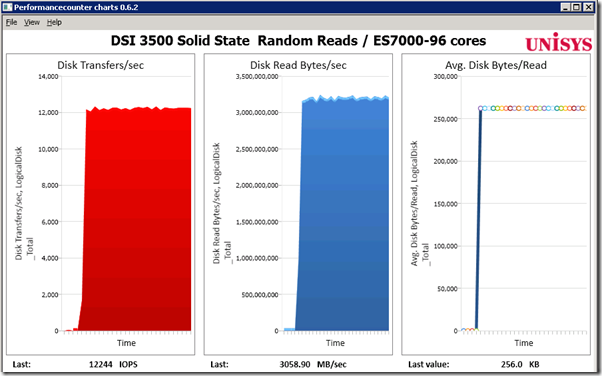

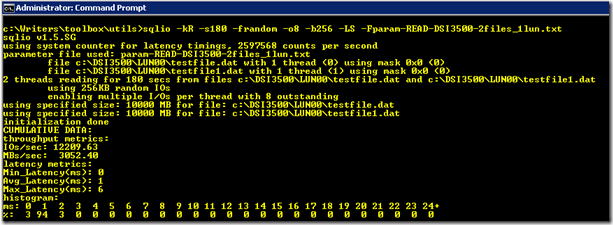

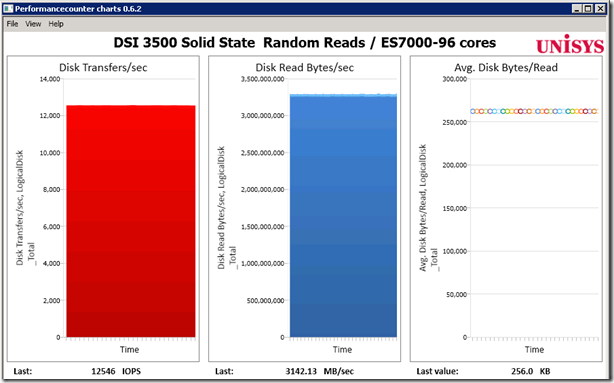

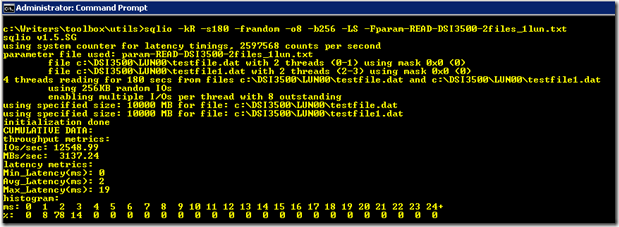

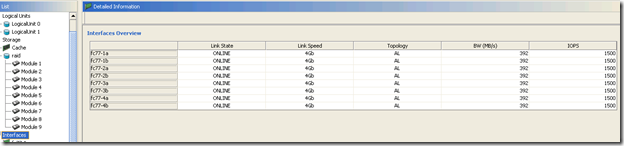

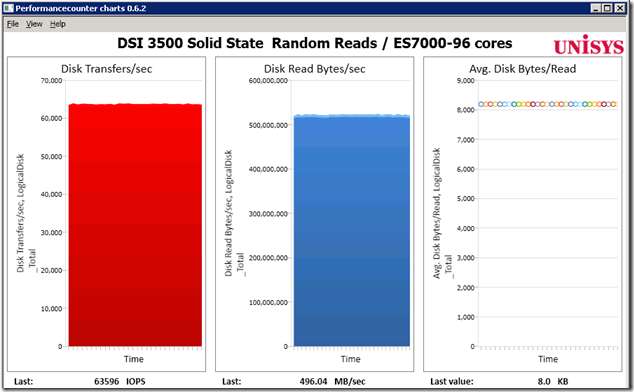

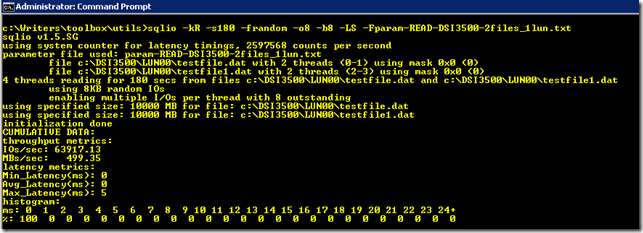

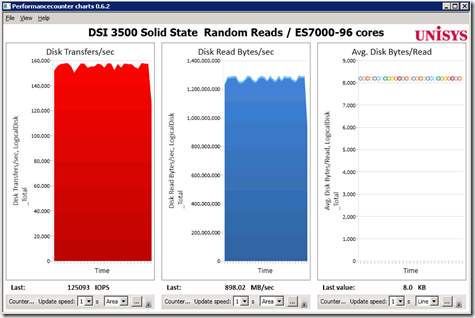

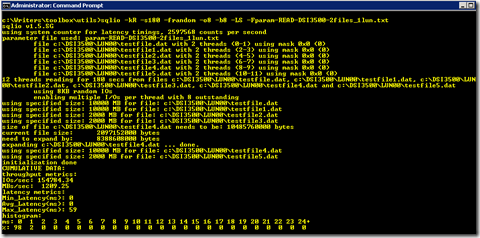

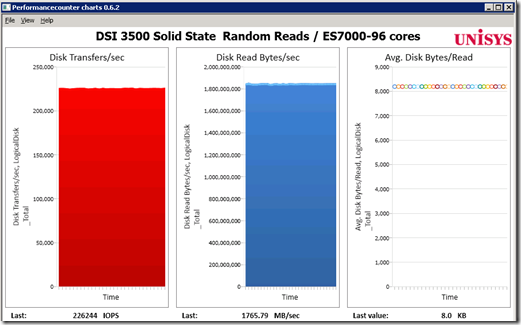

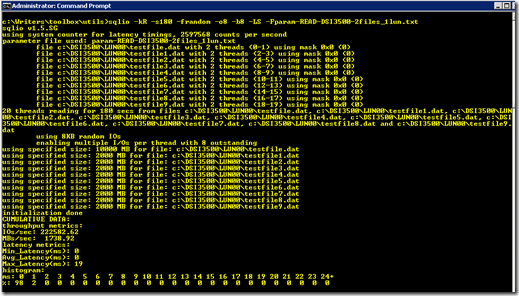

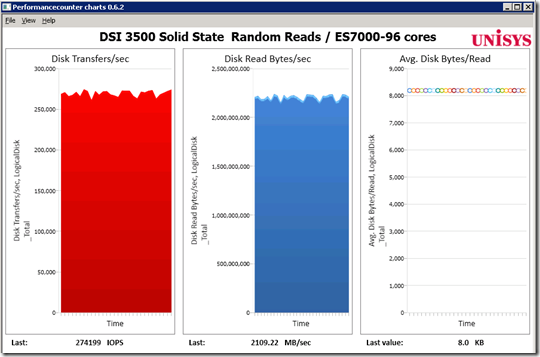

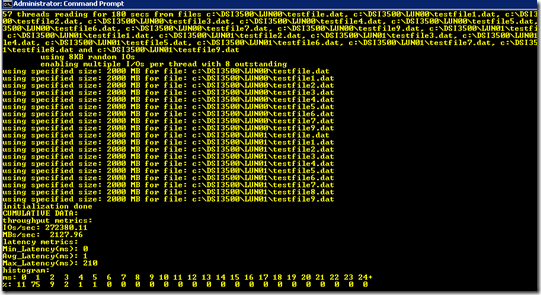

This blog post is all about squeezing the maximum out of both SQL Server and the available hardware and showing you how to measure the achieved throughput and durations the way I do. in the previous post I showed what throughput figures are possible with the SQLIO utility, now it’s time to see if we can get the same throughput with SQL Server 2008 R2 !

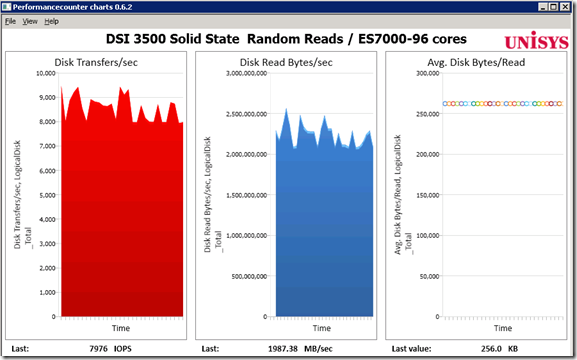

Test 3a) Table scan speed from a single table stored in a single database file

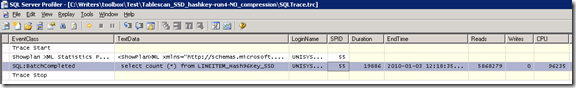

Step 1 is to test with an ‘out of the box’ configuration and see that reading a table with 400 million rows / 46 GByte of size with LineItem data takes 31.2 seconds.

The Windows Performance monitor Logical Disk counter “Disk Read Bytes/sec” shows an average of around 1400 MB/sec reading from the DSI 3500 Solid State box:

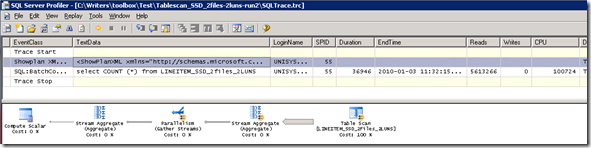

The SQL Profiler shows that approx. 5.5 million logical (8KB) reads are needed to retrieve the 46 GByte of data.

What are we waiting for?

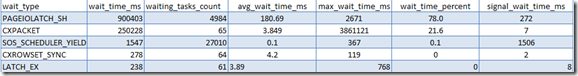

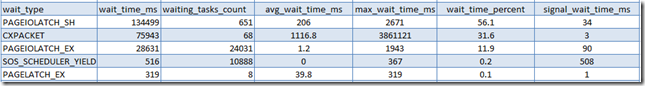

The top 2 from the Waitstats dmv (select * from sys.dm_os_wait_stats) shows 80.1% of the time SQL was waiting, is spend by LATCH_EX and 9.3% by PAGEIOLATCH_SH:

To read a page from disk and put this into cache, the worker thread will take out a EX (Exclusive) latch on the BUF associated with the page.

(See also this CSS post for more information: http://blogs.msdn.com/psssql/archive/2009/07/08/q-a-on-latches-in-the-sql-server-engine.aspx)

Or looking into the exclusive LATCH_EX wait type as described in BOL: a LATCH_EX occurs when waiting for a EX (exclusive) latch.

This does not include buffer latches or transaction mark latches. A breakdown of LATCH_* waits is available in sys.dm_os_latch_stats.

Following the books and drilling down into the latch waits with the sys.dm_os_latch_stats dmv shows the following:

On the "ACCESS_METHODS_DATASET_PARENT” latch wait type isn’t much information available, but think of this as a memory semaphore that is used to distribute the pages scanned from disk to the operators downstream in the query plan, synchronizing child dataset access to the parent dataset during parallel operations.

From several experiments I have noticed that this type of latch wait time doesn’t expose a direct relation to the overall duration of the query execution while table scanning a single table , so lets call it SQL internal housekeeping.

The PAGEIOLATCH_SH wait event occurs when a task is waiting for a latch for a buffer that is in an I/O request. When a page is not in the buffer cache, SQL Server has to first allocate a buffer page, and then puts a exclusive PageIOLatch_ex latch on the buffer while the page is transferred from disk to cache.

Meanwhile, SQL Server puts a PageIOLatch_sh request on the buffer on behalf of the user. After the write to cache finishes, the PageIOLatch_ex latch is released. This allows the user to read the buffer page after which the PageIOLatch_sh is released. The 12.1% contribution of the Buffer Latches as primary wait reason makes more sense.

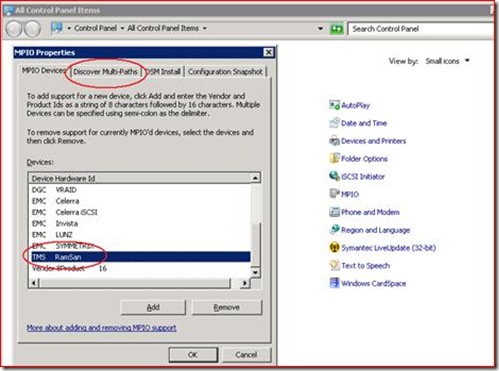

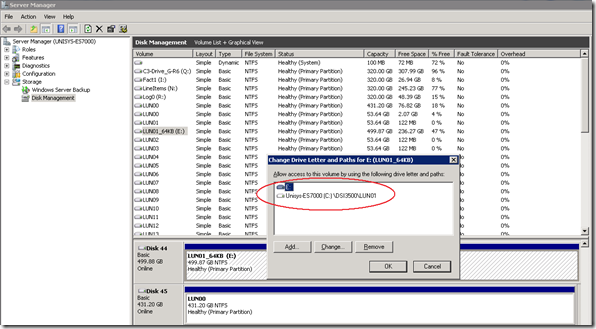

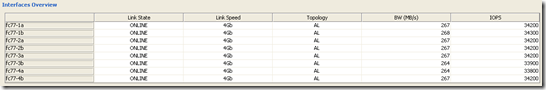

Since we know from the SQLIO tests that we use only half the available throughput bandwidth an easy option to get more I/O requests being serviced is to add more database files and more LUN’s to distribute the IO load.

Test 3b) Adding a second LUN and database file

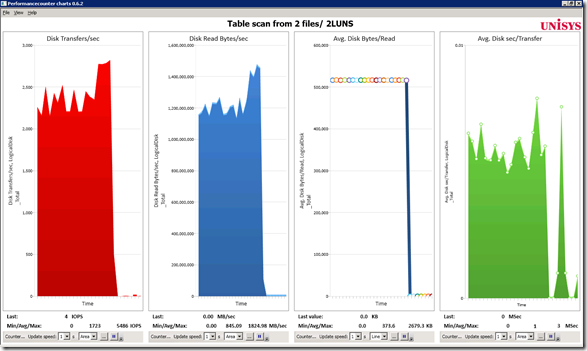

Surprisingly with the table striped over 2 database files and 2 LUNS in the same filegroup , in my tests it takes longer to read the same 400 Million rows; on average 36.9 seconds instead of 31 seconds. That’s strange… as shown with the SQLIO tests (and also by common sense) you would expect that adding a second LUN and more files would speed up data retrieval. … interesting. The graph below the average throughput (Disk Read Bytes/sec) slightly dropped to about an average of 1300 MB/sec and the disk transfer timings are average 1 millisecond.

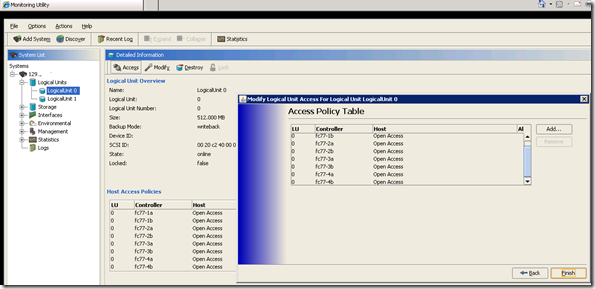

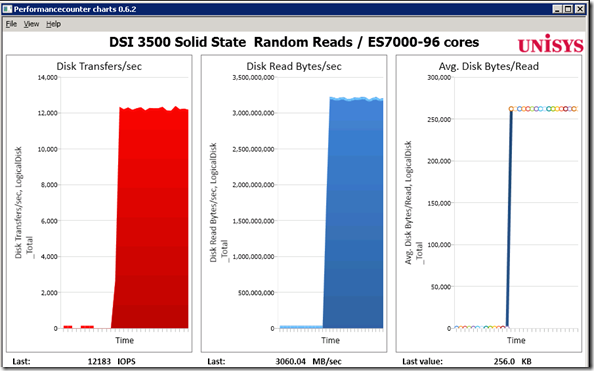

Test 3c) Table scan speed from a partitoned table with 96 partitions/24 database files and 24 FileGroups

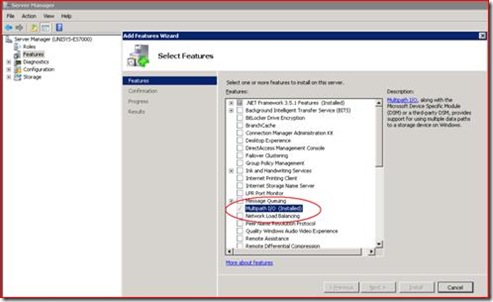

What else can we do to speed up table scanning ? About a year ago while Thomas Kejser (from he SQLCAT team http://sqlcat.com/ ) and I where preparing a presentation for the PASS European Conference 2009 conference (btw please checkout the new 2010 conference website @ http://www.sqlpass.org/summit/eu2010/ ) when we came up with the following trick to speed up the tablescanning significantly : partition the table and apply a special hashing algorithm to equally distribute the data across the partitions.

This will enable multi threading data retrieval from multiple partitions.

Steps to take:

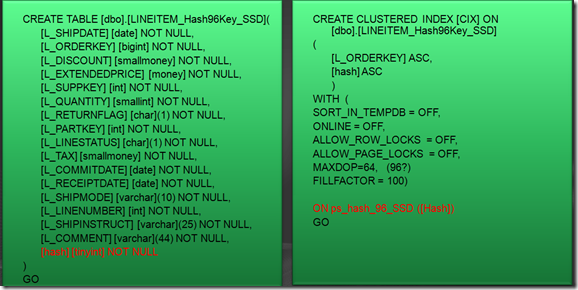

1) Optimal number of partitions should be equal to the number of available cores (96)

2) Create multiple Filegroups to hold the partitions (24)

3) Partition the tables into #cores partitions

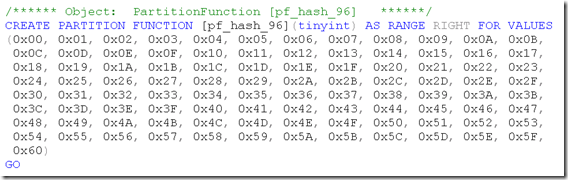

4) Use the CREATE PARTITION FUNCTION command

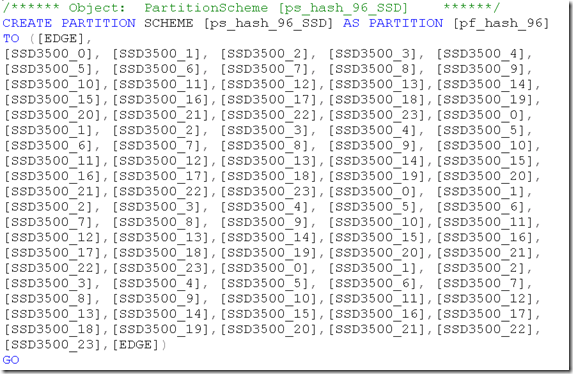

5) Use the CREATE PARTITION SCHEME command and bind the partition function to Filegroups

6) Add a hash column to the table (tinyint, just one byte per row)

7) Calculate a good hash distribution , for example, use hashbytes with a modulo function

8) Build a clustered index on the table, on the partition scheme

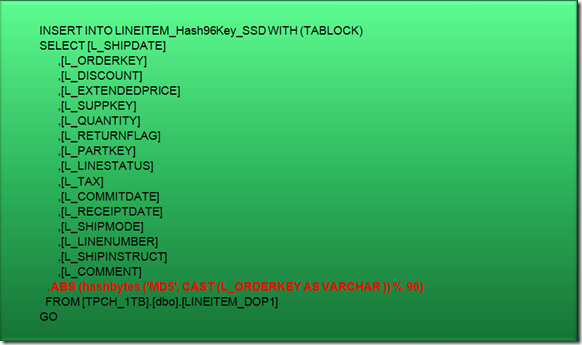

9) Insert data with some magic hashing algorithm key:

10) Check if all partitions are about equally filled:

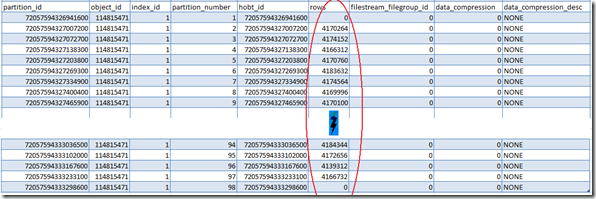

Run the Select * from sys.partitions where object_name(object_id)=’LINEITEM_HASH96key_SSD’

and check out the amount of rows per partition:

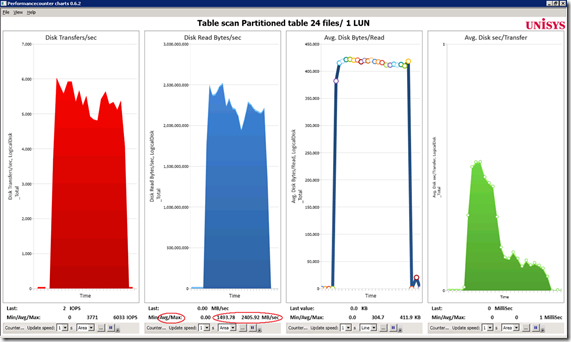

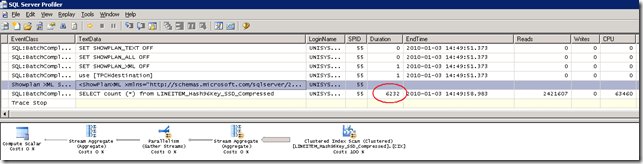

11) Rerun the command : SELECT count (*) from LINEITEM_Hash96Key_SSD

Result of table partitioning on overall throughput and duration is shown below: it takes only 19.88 seconds instead of 31.2 seconds,

so 11.3 seconds or 36% faster. That’s an interesting gain!

Waitstats

the no. 1 waittype , with 78% , is time spend by waiting for PageIOLatch_SH, with an average of 180 Millsec per event, means a disk bottleneck;

We are maxing out on our single LUN.

Latchwaits

Test 3d) Table scanning from a partitoned table with 96 partitions, 24 db files, 24 FileGroups and PAGE Compression

A last tip: enabling Page compression on the table will also speed up scanning:

command: ALTER TABLE [dbo].LINEITEM_hash96key_SSD REBUILD WITH (DATA_COMPRESSION = PAGE )

Result: we can now scan 400 million rows / 46 GByte of data in only 6.2 seconds.. or in only 20% of the time.

Wait_types

Latches:

Additional information

World’s Fastest Flash-Based SSD: The DSI 3500 Solid State: http://www.dynamicsolutions.com/main-menu/dsi3500

Unisys whitepaper: Microsoft SQL Server 2008 Data and Backup Compression: http://www9.unisys.com/eprise/main/admin/corporate/doc/41371394.pdf

Wrap up

The examples above show that SQL table scan operations can be optimized greatly; partitioning a table or applying some sort of compression makes a significant difference in overall scan speed.