Speeding up Reading from a SQL OLE DB or ADO.Net Data Source

With small datasets on a test system you will probably hardly notice the difference, but when you are building SSIS packages that need to process Gigabytes of data in a production environment on a day to day basis, the following Performance tip to optimize reading from the SQL data source is one to remember.

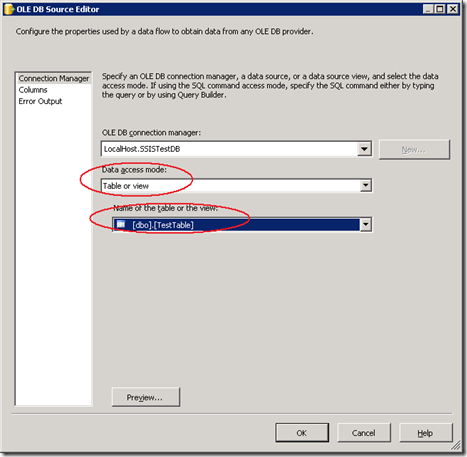

1) Data Flow OLE DB Source creation

Very likely when you select your OLE DB (or ADO.Net) Source, you pick the default data access mode “Table or view” and the table with the data you need;

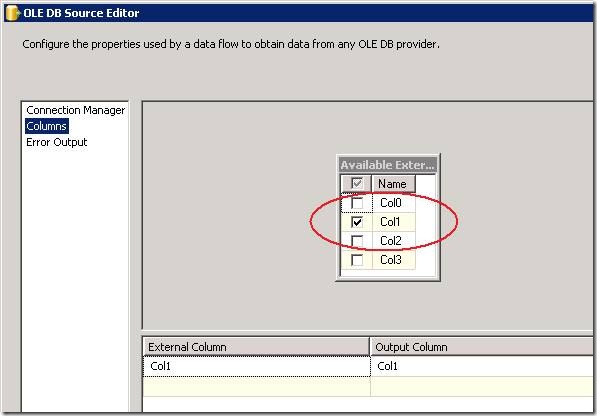

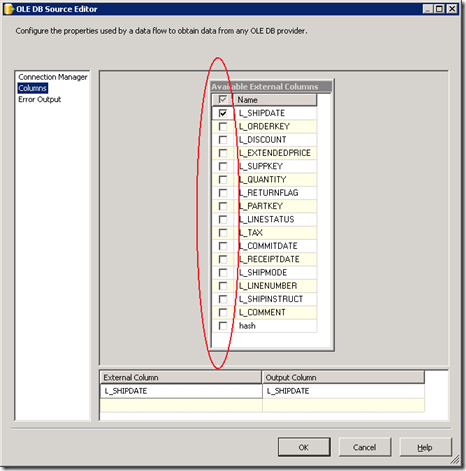

In our case, it’s a TestTable which has 4 columns, and lets deselect the columns we don’t need ;

2) Measuring the throughput

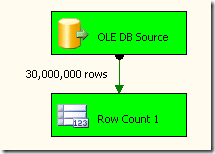

To check quickly the throughput of the Data Source use the Row Count trick; count the rows and measure the elapsed time.

When we execute the package it takes 61.2 seconds to run the SSIS package, which is like 490 000 rows /sec.

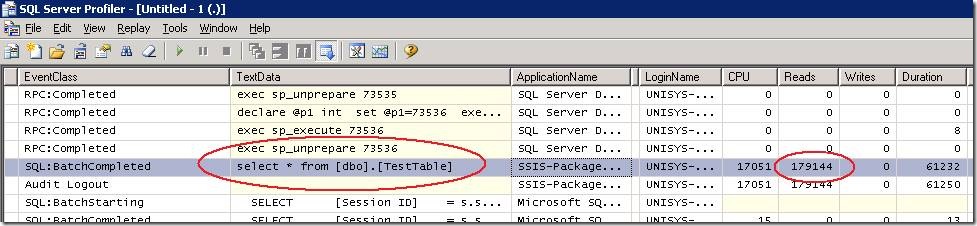

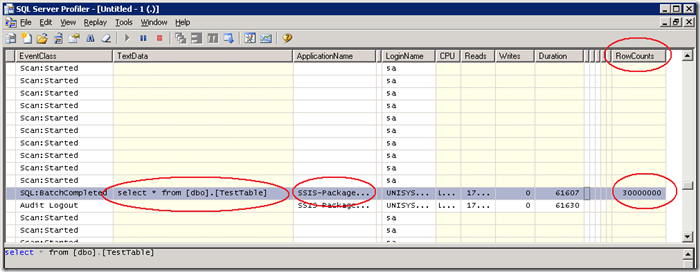

Surprisingly if you look at the SQL Profiler trace, you will notice that SQL Server executed from a SSIS package a “Select * from [dbo].[TestTable] request which took 61.2 seconds !

This is strange; we just selected Column1 to execute the Rowcount on? Or may be not…

3) Be Selective

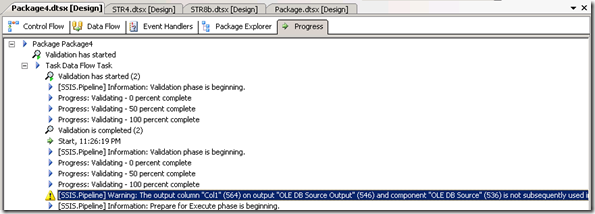

The default Table or View selection will always execute a “Select * from Heaven” and can become very costly if your database contains many columns (I will show you later the impact) but what’s important to realize is that the SSIS Progress tab will not warn you for this behavior… Typically if there are unused data columns or any room for improvement along the line you will see a warning in the Progress tab tune your package to avoid unnecessary data movement.

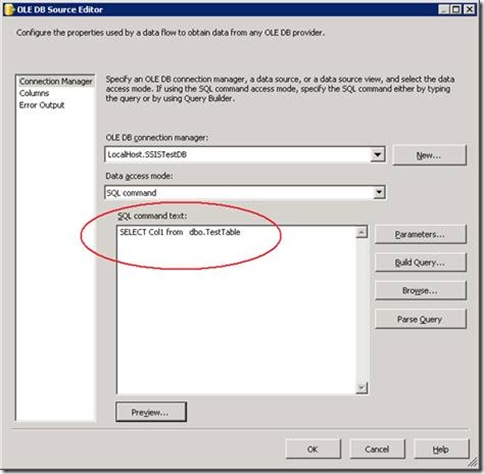

To increase the processing speed of you packages overcome the full table scans and transfer only the data that you really need. Be as selective as possible. In this case to retrieve just Col1: Select a different Data Access mode, pick “SQL Command” (instead of “Table or view”) and build a query “SELECT Col1 from dbo.TestTable”.

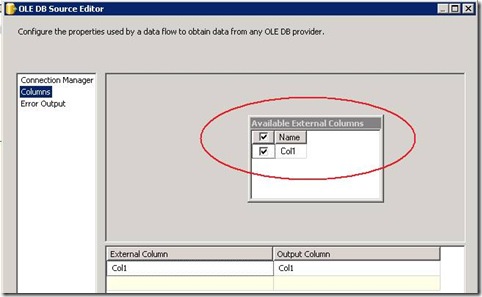

This query will only create 1 column as output which will show up in the SSIS OLE DB Source Editor as a single available external Column:

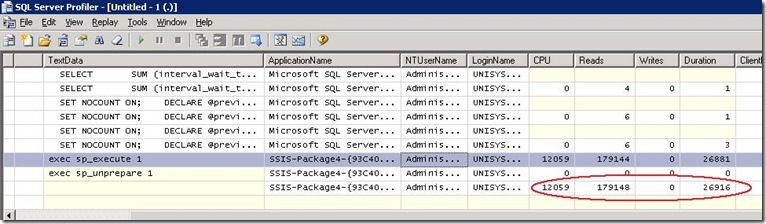

Result: with just column1 being retrieved, it still takes 179148 reads, but only 26.9 seconds to get all the data transferred, instead of 61.2 seconds :

Make it a habit to question yourselves where the untagged columns come from.

3a) Impact on Large Datasets

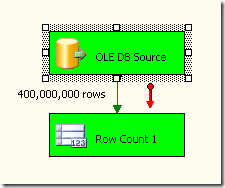

Just to show you the impact of what it take to scan a table with 400 million row , and 17 column wide data set..:

Duration: 29 minutes 55 seconds to scroll through all 400 Million columns:

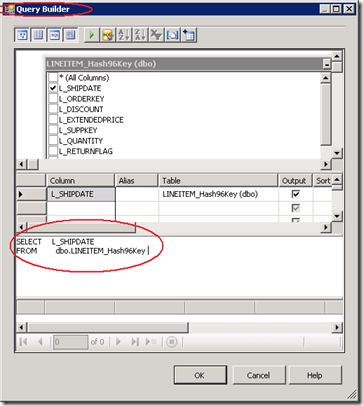

When you build a much more selective query by typing a statement or by using the Query Builder :

Retrieving the same L_Shipdates date takes only 6 minutes 17 seconds , which is 23 minutes and 38 seconds , or 79%… LESS Time!

Of course, by building you package using WHERE clauses and filter out the specific ranges you really need will make the optimization complete.

4) How to check existing SSIS Packages for the “Select * FROM heaven”

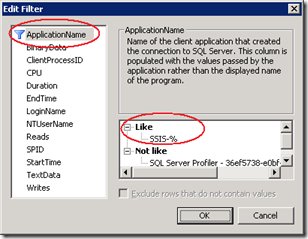

This an interesting question; at the moment I think the simplest way to get a hint where to start looking is to use a lightweight SQL Profiler trace that checks for:

1) SSIS Package executions + start date & time,

2) Table scans commands

3) The Row Count

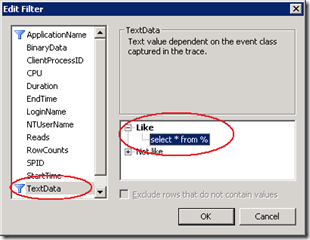

With SQL Profiler , the trace setup will look like this: Apply 2 filters by clicking the “Column Filters” button:

– “ApplicationName” Like: “SSIS-%” and

– “TextData” Like “Select * from %”

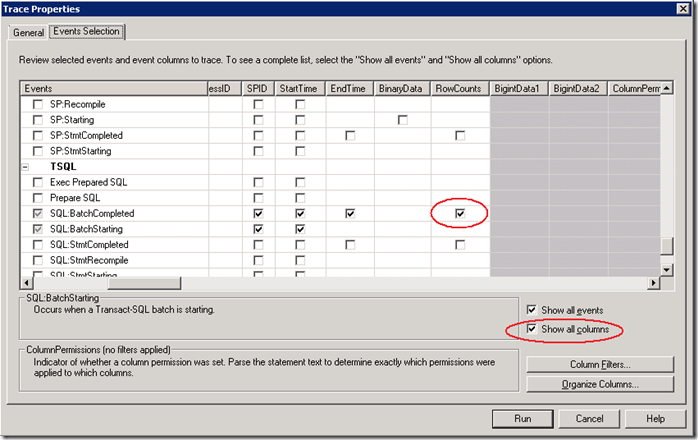

As third step, select the “Show all columns” option , look for the RowRounts column, and scroll in all the way down to the TSQL section to select SQL:BatchCompleted:

Result:

5) Additional Reading

Luckily I found one location where this tip is written down also;  on page 414 of the book with lots of insights “Professional Microsoft SQL Server 2008 Integration Services, written by my friend Grant Dickinson and other great guys like Brian Knight, Erik Veerman, Douglas Hinson, Darren Herbold.

on page 414 of the book with lots of insights “Professional Microsoft SQL Server 2008 Integration Services, written by my friend Grant Dickinson and other great guys like Brian Knight, Erik Veerman, Douglas Hinson, Darren Herbold.

Wrap up

With the walkthrough example above I showed you the impact on the overall performance when using the default SSIS “Table or view’ data source selection on small and large data set, and also the effect on the overall runtime of your SSIS packages. Check your most demanding (or long running) packages on their selectivity and narrow down the number of columns you are requesting from the database where possible. Move only the data around that you really need!

8 Responses Leave a comment

Very very good, thank you.

Very good article

Hi,

Instead of embedding sql query in the ssis package and even avoiding view to select required columns, can we create some custom components that just retrieves only selected columns(at design time).

Hi Bali,

Thanks for thinking with me on this topic; what type of custom component are you thinking of ?

To limit the output send out to SSIS you have to be as selective as possible on the SQLServer side ;

For example, you could also build a View into your database and query the view from SSIS ?

Create view FASTREAD

as

SELECT l_Shipdate

FROM dbo.lineitem_Has96Key

To my best understanding this is still the best and fastest way;

(In SQL 2012 there will be a new index type ; the column store index that significant increases querying of a specific column too ! )

Brgds, Henk

Thank you for the informative article.

I am going to check this out on an SSIS package which is giving me a lot of troubles and see how it goes.

Regards,

Manish

Hi,

Very good article ,too informative. The topic is on “Speeding up Reading from a SQL OLE DB or ADO.Net Data Source”. I dint get the difference between OLEDB and ADO.NET source components. May i know the major difference please.

Thanks,

Venkat

Hi Venkat,

Both connection types are used quite often and the techniques/tips I describe will work with both; from what I have measured, oledb is typically (~15%) faster than ado.net (please try for yourselves with the SSIS Rowcount component as listed under 2) !)

Hi,

Great exercise, however in my case this doesn’t seem to work that well. I need to extract a dataset +- 1.4 billion rows which I need to push to another table. Is there any suggestion I could try out?

Many thanks,

Lennon