How to increase the SQL Database Restore speed using DB compression and Solid State Disks

a couple of months ago I blogged about how to speed up a full database backup; but what about the restore speed? Indeed also an interesting topic to investigate. So I ran all kind of tests and found out that it is possible to restore a database at least 3 times faster provided that you have sufficient CPU’s and Disk IO capacity available.

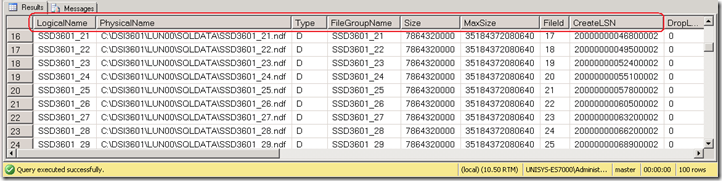

The restore tests are executed on the same hardware as the previous posts; a 96 core Unisys ES7000 server with both a DSI 3500 and DSI 3600 Solid state storage unit attached. The database to restore is an 750GB Database with 642 GB of data spread across 96 files and 96 File Groups.

Monitoring a Restore Operation

SQL Server provides little information about the restore speed when a Full DB restore is in progress so lets rely on the regular windows perfmon counters like

– Disk Read+Write Bytes/sec to measure the throughput

– Average Disk Bytes per Read+Write to determine the IO Blockize

– % Processor time of the SQLServer process.

Step 1) The “Out of the Box” Restore performance

First step is to determine the effective available hardware I/O bandwidth on the server for both reading the backup file(s) and restoring the database files.

Easiest way to measure this is by using the SQLIO utility. The restore command is using large IO’s ranging from 512 KB to over 1 MByte (up to 4) so lets run the SQLIO test with large blocks also.

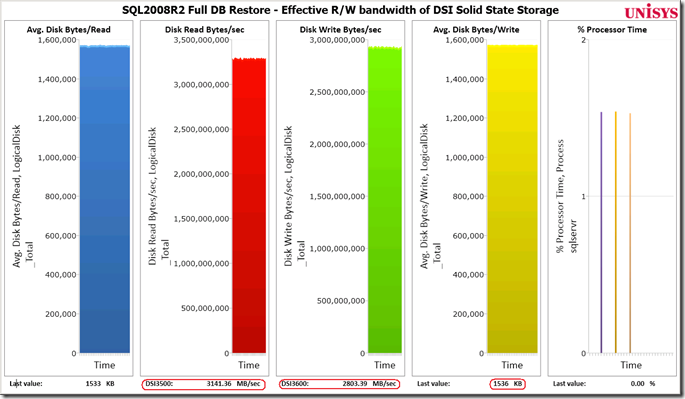

The SQLIO test shows we have a Random Read bandwidth available of over 3 GByte/sec and a Random Write bandwidth which is sustained over 2.74 GB/sec. This should be more than enough for our restore experiments.

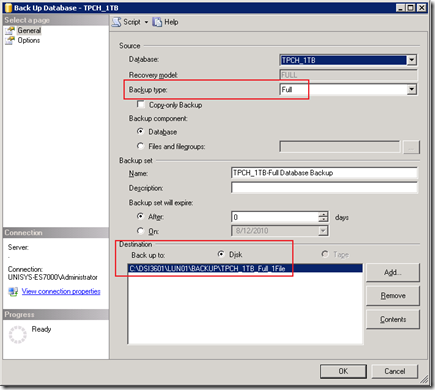

Step 2) Getting started: creating a Full database backup into a single destination file

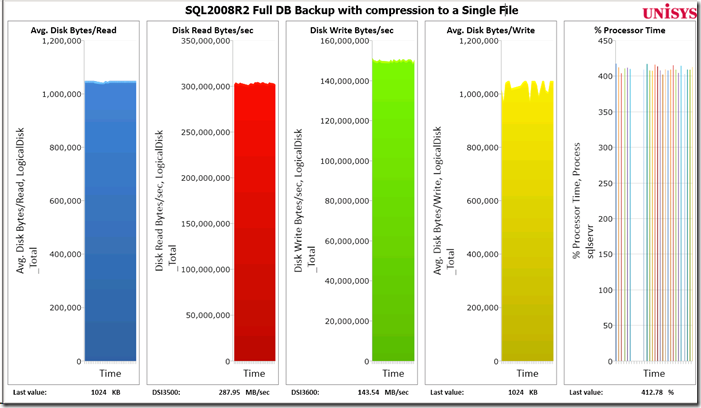

To backup the complete database with compression (84267834 pages, or 642 GB) takes 2198 sec, which is 36 minutes 38 seconds. (I capture the exact durations with SQLProfiler monitoring the Master database). The backup creates a 317 GB flat file.

Impression of the Backup speed: the database is being read with 290 MB/sec and written out to the destination file with 143 MB/sec. SQLServer is using approx. 4 CPU’s:

Step 3) Restoring the database – Reading from a single file

To quickly check the content of a backup file you can use the SSMS GUI or use the following command:

RESTORE FILELISTONLY FROM

DISK = N’c:\DSI3601\LUN01\backup\TPCH_1TB_Full_1File’

WITH FILE=1;

Through the SSMS GUI or with the following statement you can start the Restore operation:

DISK = N’C:\DSI3601\LUN01\BACKUP\TPCH_1TB_Full_1File’

WITH FILE = 1, NOUNLOAD, REPLACE, STATS = 10

GO

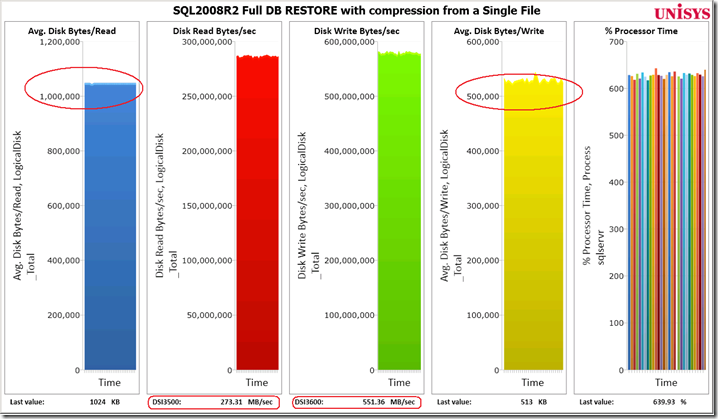

Restore from a Single File

To restore the entire database takes 19 minutes and 46 seconds. Restoring the database is significantly faster than backing it up (which took 36 min.38 sec.)

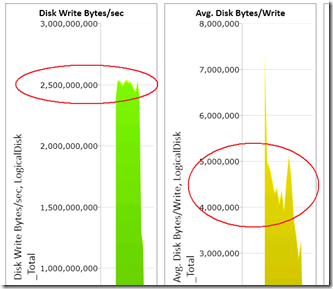

The data is read from the flat file with 1 MByte IO’s and written to SSD with 512 KB IO’s using 6-7 cores;

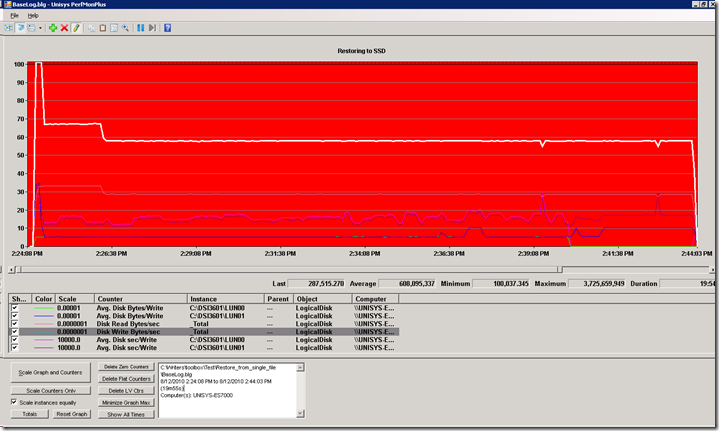

I logged the Average Disk Write Bytes/sec data into a Perfmon log and the counters show that we do not hit a hardware limit yet; the write throughput is a steady flat line which (typically) indicates that the throughput is limited by the application itself. Time to investigate our options!

Restore Performance optimization tip #1

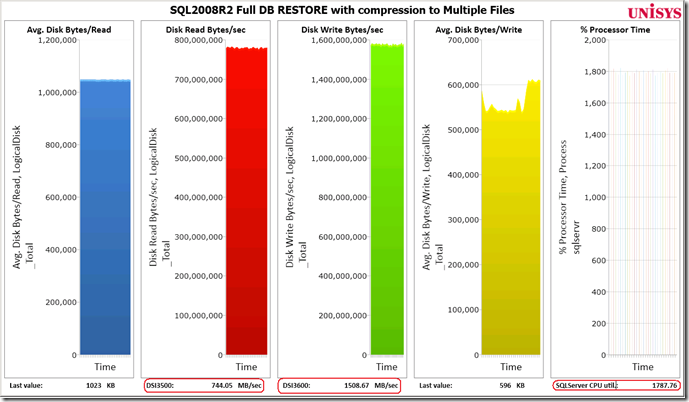

Writing out the Backup set to multiple destination files (“dump devices”) will speed up both the backup and restore process significantly.

To BACKUP the DATABASE to 9 files shows that 84267834 pages are backed up in 605.411 seconds (1087.430 MB/sec).

Restoring the database while reading from 9 backup files is speeding up the restore process; the write speed increases significantly from, on average, 600 MB/sec to 1479 MB/sec

and completes in 445.191 seconds using 18 CPU’s. This is 2.5x faster.

RESTORE DATABASE [TPCH_1TB]

FROM

DISK = N’c:\DSI3601\LUN01\backup\TPCH_1TB-Full’,

DISK = N’C:\DSI3601\LUN01\backup\File2′,

DISK = N’C:\DSI3601\LUN01\backup\File3′,

DISK = N’C:\DSI3601\LUN00\backup\File4′,

DISK = N’C:\DSI3601\LUN01\backup\File5′,

DISK = N’c:\DSI3601\LUN01\backup\File6′,

DISK = N’C:\DSI3601\LUN01\backup\File7′,

DISK = N’C:\DSI3601\LUN01\backup\File8′,

DISK = N’C:\DSI3601\LUN00\backup\File9′

WITH FILE = 1, NOUNLOAD, REPLACE

, STATS = 10

GO

Impression of restoring the full db, reading from 9 backup files:

Restore Performance optimization tip #2

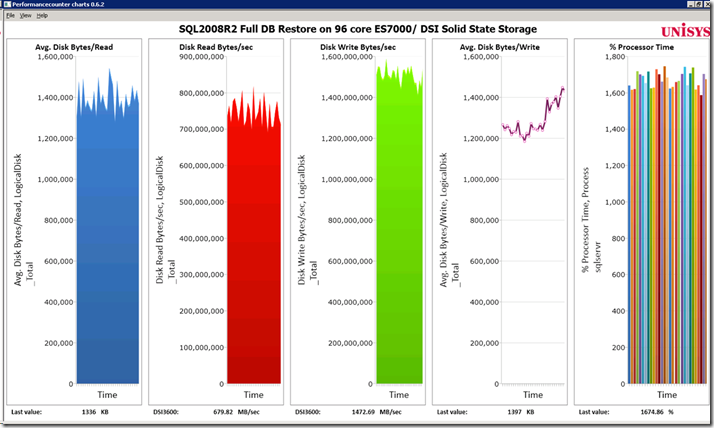

To ramp up the throughput even further experiment with specifying the BUFFERCOUNT value. This will bring the maximum throughput to our test.

Running @ Full speed the restore completes in only 5 minutes 40 seconds!

RESTORE DATABASE successfully processed 84267834 pages in 340.330 seconds (1934.423 MB/sec).

Specifying the BUFFERCOUNT value brings us another 454 MB /sec extra restore speed !

go

RESTORE DATABASE [TPCH_1TB]

FROM

DISK = N’c:\DSI3601\LUN01\backup\TPCH_1TB-Full’,

DISK = N’C:\DSI3601\LUN01\backup\File2′,

DISK = N’C:\DSI3601\LUN01\backup\File3′,

DISK = N’C:\DSI3601\LUN00\backup\File4′,

DISK = N’C:\DSI3601\LUN01\backup\File5′,

DISK = N’c:\DSI3601\LUN01\backup\File6′,

DISK = N’C:\DSI3601\LUN01\backup\File7′,

DISK = N’C:\DSI3500\LUN01\backup\File8′,

DISK = N’C:\DSI3500\LUN00\backup\File9′

WITH FILE = 1, NOUNLOAD, REPLACE

, BUFFERCOUNT = 2200

GO

DBCC traceoff(3605, 3004, 3014, 3213, –1)

GO

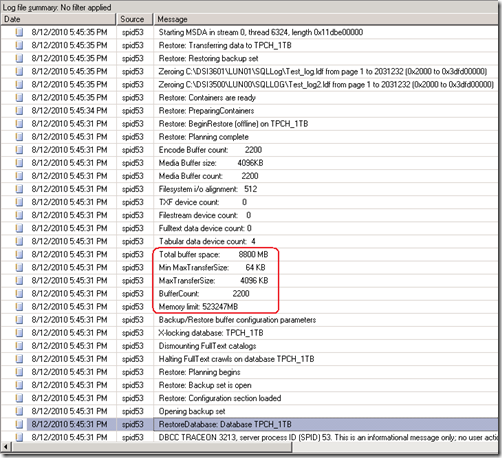

Impression of the various metrics while restoring @ maximum speed:

Observations

If you would like to gather more information about the backup and restore operations you can enable, (in your test environment only of course), the following trace flags:

DBCC traceon(3605, 3004, 3014, 3213, -1). The information will be logged into the SQL Errorlog.

Media Set BLOCKSIZE option

During the restore experiments I noticed that using the Media Set BLOCKSIZE option is a serious performance killer.

Also this option does not show up in the Log; I was assuming that this option would somehow affect some of the settings like the “MinMaxtranferSize” but it doesn’t.

With the BLOCKSIZE set to 65536, the restore suddenly takes more than 8 minutes and the average write throughput is down to 1400 MB/sec ;-(

with the BLOCKSIZE set to 32768, the restore takes more than 10 minutes to complete.

In this PSS article I read that SQL2005 SP2 is capable of writing 1 MByte IO’s during restore operations to reduce file extension needs and NTFS metadata maintenance.

Increasing the MAXTRANSFERSIZE option seems to affect the write IO block size, it shows an increase from on avg. 600-800 KByte to 1MB or more, but during my experiments the mileage varied.

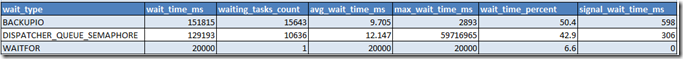

Sampling the SQL sys.dm_os_wait_stats for 20 seconds:

Explanation of the wait stats;

BACKUPIO: Occurs when a backup task is waiting for data or is waiting for a buffer in which to store data: unfortunately increasing the buffercount even further does not help to reduce this number 1 wait. Maybe adding more LUN’s will help.

DISPATCHER_QUEUE_SEMAPHORE: Occurs when a thread from the dispatcher pool is waiting for more work to process.

Zeroing out the log files at the beginning of the restore happens extremely fast with very large 4-5 MByte writes:

Restoring on a File or FileGroup level

Besides the Full Database restore option, you can also restore on a File- or FileGroup basis. I ran a couple of tests to see if these options will restore even faster.

Single FileGroup restore

To restore a single File Group (6.7 GB / 877928 pages) takes 7 minutes and 7 seconds (17.3 MB/sec). With Buffercount=1100 specified it takes only 3 minutes 12 seconds (41.2 MB/sec). A single FileGroup restore is using up to 17 CPU’s so it wouldn’t be an option to restore multiple FileGroups in parallel to speed up the restore operation. Also because you cannot start 2 FG restore tasks in parallel: When you start multiple FileGroup restore tasks you receive the message “Exclusive access could not be obtained because the database is in use”. Restoring them all in sequence 1 by 1 would have taken almost 5 hours.

But… you can specify All FileGroups to restore them all at once. Restoring all 97 FileGroups (including the primary) provide similar performance as a regular Full DB Restore:

Best result : 5 minutes 39 seconds. (RESTORE DATABASE … FILE=<name> successfully processed 84267834 pages in 339.174 seconds (1941.016 MB/sec)).

You can check the status of the Filegroup afterwards with the following command: “SELECT name,state_desc FROM sys.database_files” ;

If you forgot to restore one or more Filegroups, the “State_desc” status will be “RESTORING” instead of “ONLINE”.

Wrap-Up

The tricks to speed up a database Backup also apply to increase the Restore speed; simply add more backup destination files and specify a high Buffercount value.

This will bring you up to 3.3x faster Restores. In my tests the restore time of a 462 GB Database decreased from roughly 20 minutes to less than 6 minutes.

Provided you have sufficient CPU’s (on average 23, up to 34 CPU’s are in use by SQLServer) and fast Solid State Storage to keep up with the IO throughput, SQL Server 2008R2 will leverage the available CPU’s and IO bandwidth very well to restore databases quickly.

4 Responses Leave a comment

Another great post Henk!

How did you decide what the buffercount should be? Did you see anything that would lead you to a different value if you had different a) cpu count b) disk I/O throughput or c) memory available?

Cheers, James

P.S. Will we be seeing you at SQLBits?

Hi James, thanks :-)

while checking the output of the traceflags I noticed the default memory buffersize during a backup/restore is only a couple of Megabytes in size. This seemed a bit small to me when moving Gigabytes around, so I just experimented whith larger values.

1) CPU’s: up to 34 CPU’s are in use (out of 96 available). Contribution per CPU seems pretty lineair.

2) Disk I/O: Same ; not hitting a physical I/O bottleneck yet, but I do believe there might be a change that the throughput gets limited by the no.of LUN’s in use (up to 4 in my experiment). This might be worth the try to increase…

3) Memory usage: Restore buffer cachesize is 8800 MB. (Out of 512 GB available)

When time permits I will run some code profiling traces to see what’s going on under the hood..

The SQLBits program looks also this time more than amazing! Great topics and speakers; I might join you if my schedule permits…

Cheers, Henk

Hi Henk,

great series of articles, you really are a lucky guy to have such a toy to play with :)

I was wondering if you have given the SQL service account the privilege “Perform volume maintenance taks” to allow SQL server to skip zeroing out the data files when restoring. You didn’t seem to mention it here and it can further improve restore speeds (as well as autogrowth).

Cheers

William

Hi William,

thanks for your question; yes, I do run with the “Perform volume maintenance task” permission enabled but both LOG files are always zeroed out according the traceflag output; It takes approx. 1 minute for both 15 GB log files for the “Zeroing completed” message appears in the SQL Server log. A best practice is Not to oversize log files to save restore time.

-Henk