Introduction

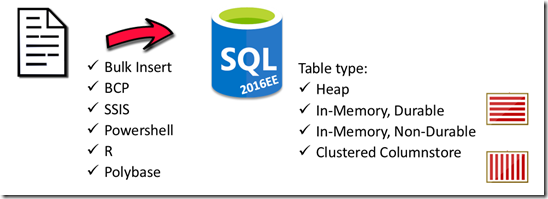

With the release of SQL Server 2016 Service Pack 1 the In-Memory ColumnStore technology is now also available in the Standard, Web and even Express and LocalDB Editions. Besides the benefit of only 1 codebase to maintain, this change in policy will also become a clear disk storage space saver due to its high data de-duplication and compression ratios and, last but not least, it’s also a serious ad-hoc query performance booster!

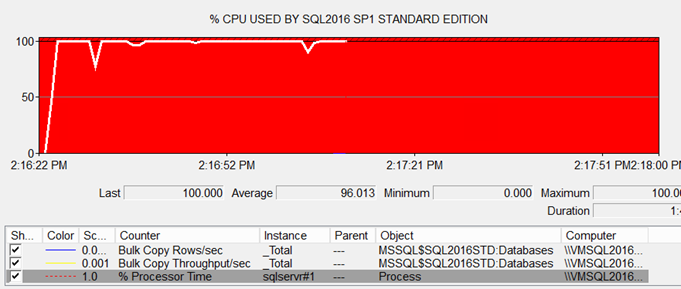

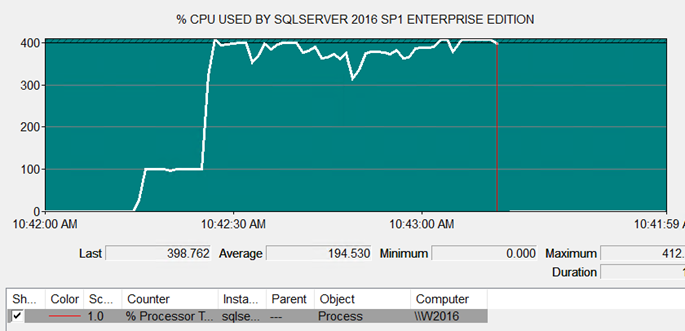

The main difference between the SQL flavors is how much CPU power and memory is allocated to tasks like (re-)building of the Clustered ColumnStore Index. For example: with the Standard Edition a single core (=max. 100% Processor time of the sqlservr process) is being used and querying a CCI happens with a maximum of 2 CPU’s (MAXDOP=2), versus leveraging all available CPU’s in Enterprise Edition.

Building a Clustered ColumnStore Index (CCI) with SQL Server 2016 Standard Edition:

Building a CCI with all 4 available cores with SQL Server 2016 Enterprise Edition:

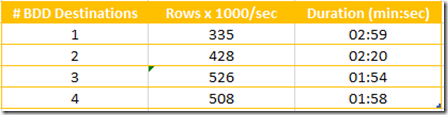

The base timings for loading 7.2 GB/~60 Million rows from a single TPCH lineItem files doesn’t show much of a difference between the flavors when Bulk inserting the data direct into either a heap table or a table with a CCI; the difference become clear when we compare the time needed to build a CCI on a heap table or rebuilding a CCI:

To summarize, the absolute fastest way to have data available in a table with a Clustered ColumnStore Index is to: load into heap + build the CCI afterwards with SQL 2016 Ent. Ed.

Direct load into CCI

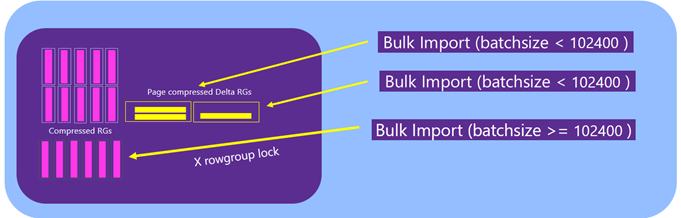

For tables with a Clustered ColumnStore Index already created make sure that you stream directly into Compressed Row Groups to maximize throughput. In order to do so, the Insert batch size should be equal or larger than 100K Rows (102400+ to be precise).

Smaller batches will be written into compressed delta store tables first before being tuple moved into its final compressed Row Group segments, which means SQL Server has to touch the data twice:

There are various options to load data and we will go over the most frequently used ones, like the Bulk Insert command, BCP and SSIS. Let’s see what is needed to get best performance and how to monitor!

CREATE TABLE [dbo].[LINEITEM_CCI] ( [L_SHIPDATE] [smalldatetime] NOT NULL, [L_ORDERKEY] [bigint] NOT NULL, ... [L_SHIPINSTRUCT] [varchar](50) COLLATE Latin1_General_100_BIN2 NOT NULL, [L_COMMENT] [varchar](255) COLLATE Latin1_General_100_BIN2 NOT NULL ) go -- Create CCI: CREATE CLUSTERED COLUMNSTORE INDEX [CCI_IDX] ON [dbo].[LINEITEM_CCI] WITH (DROP_EXISTING = OFF, COMPRESSION_DELAY = 0) ON [PRIMARY] go

1) T-SQL Bulk Insert

Let us start with a the BULK INSERT command:

BULK INSERT [LINEITEM_CCI] FROM 'F:\TPCH\lineitem.tbl' WITH ( TABLOCK, FIELDTERMINATOR = '|', ROWTERMINATOR = '\n',

-- Batchsize = 102400

);

Checking Data Load Progress

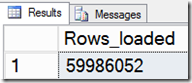

To check the Number of Rows that already got loaded into the CCI, even when the Table Lock option is being used, query a new dmv called ‘sys.dm_db_column_store_row_group_physical_stats’:

-- Check data loading progress on bulkinserts: select SUM (total_rows) as Rows_loaded from sys.dm_db_column_store_row_group_physical_stats where object_id = object_id('LINEITEM_CCI1')

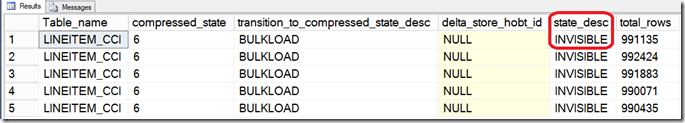

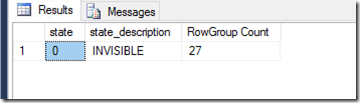

This DMV will also reveal the possible Resource Group states in more detail while loading. There are four possible Row Group states while loading data. When you see the state ‘INVISBILE’ like in the picture below means that data is being compressed into a RowGroup.

0: INVISIBLE (= RowGroup is in the process of being built from data in the delta store)

1: OPEN (= RowGroup is accepting new records)2: CLOSED (= RowGroup is filled but not yet compressed by the tuple mover process) 3: COMPRESSED (= RowGroup is filled and compressed).

4 = TOMBSTONE (= RowGroup is ready to be garbage collected and removed)

SELECT object_name (object_id) as Table_name, transition_to_compressed_state as compressed_state, transition_to_compressed_state_desc, delta_store_hobt_id, state_desc, total_rows from sys.dm_db_column_store_row_group_physical_stats where object_id = object_id('LINEITEM_CCI') order by closed_time desc

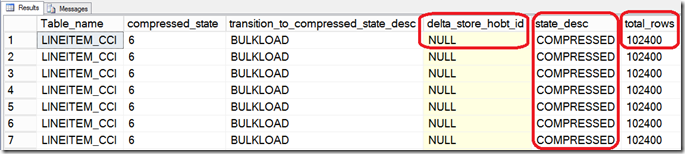

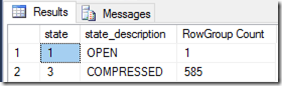

By specifying the Batch Size with a value of 102400 or higher you will achieve maximum performance and data will get streamed and directly compressed into its final RG; this behavior will show up as ‘COMPRESSED’.

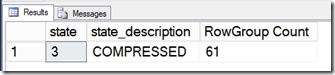

You can also check a DMV that got introduced with SQL2014 to check on the RowGroup State, which is the sys.column_store_row_groups DMV:

-- Check the ColumnStore RowGroup state: select state, state_description, count(*) as 'RowGroup Count' from sys.column_store_row_groups where object_id = object_id('LINEITEM_CCI') group by state, state_description order by state;

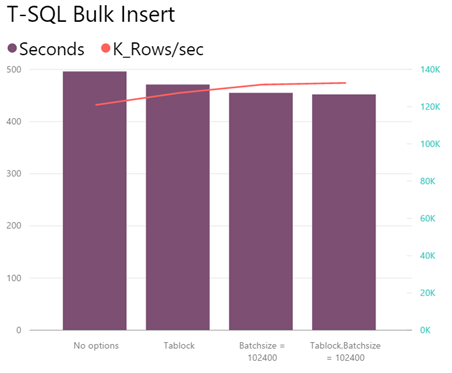

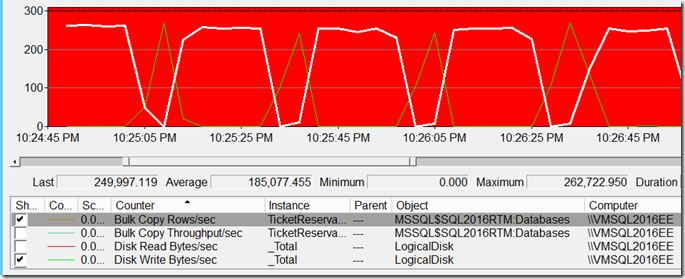

Test Result

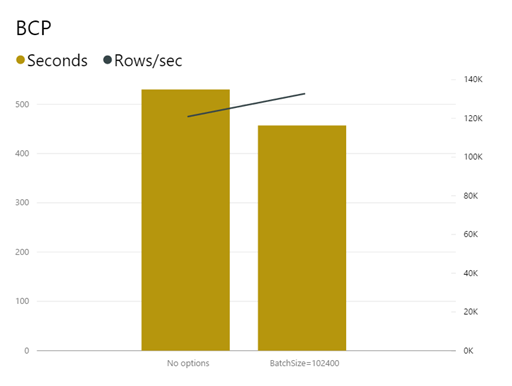

Bulk inserting data into a table with CCI via the Bulk Insert command can slightly be improved by adding the ‘Batchsize=102400’ and TABLOCK options. This brings an ~8% improvement in throughput.

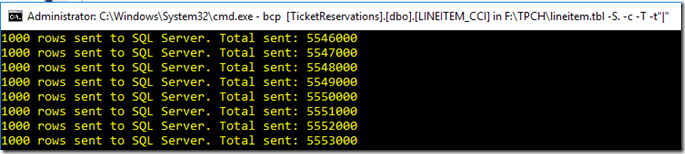

2) BCP.exe

The BCP utility is still being used quite heavily in many production environments so worth to check on it quickly: by default, the BCP sents 1000 rows at the time to SQL Server.

The time it takes to load 7.2GB of data via BCP: 530 seconds , or 113K rows/sec

The RowGroup state shows ‘ÍNVISIBLE’ which means that with the default settings the Delta Store is being used.

To make sure the BCP command streams the data directly into the compressed RG’s you have to add the batchsize ‘–b’ option with a value of at least 102400. I ran various tests with larger batch sizes: up to 1048576, but the 102400 gave best me the result.

Command:

BCP [DB].[dbo].[LINEITEM_CCI] in F:\TPCH\lineitem.tbl –S . -c -T -t"|" -b 102400 –h tablock

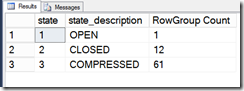

The RowGroup state now shows ‘COMPRESSED’ which means we bypass the Delta Store and data streams into the compressed RG’s:

Result: the BCP completed in 457 seconds, or 133K rows per second or ~14% faster:

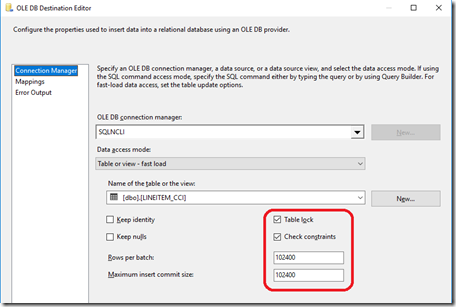

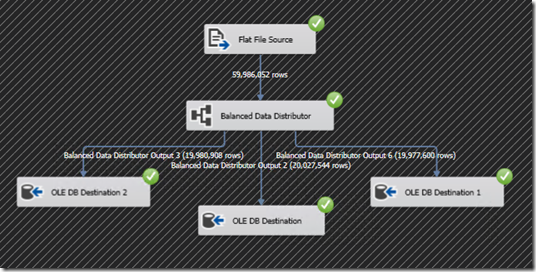

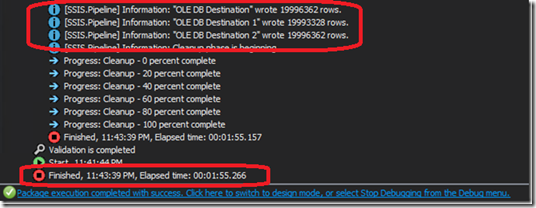

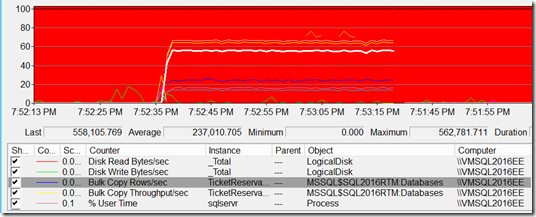

3) SSIS

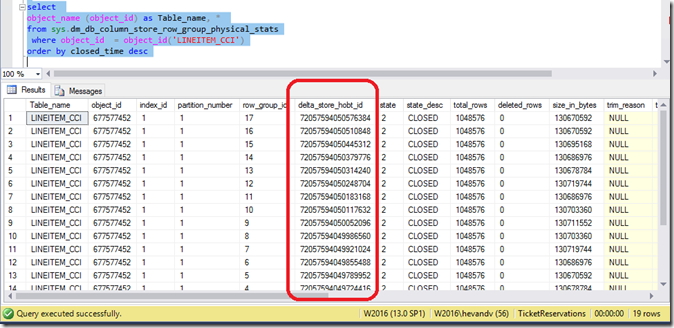

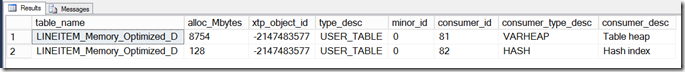

During testing I noticed that the default SSIS 2016 settings use memory buffer sizes that can also potentially limit the batch size to become less than 100K Rows. In the example below you see that data landed in delta stores: the RG states are ‘Closed’ and the ‘delta_store_hobt_id’ fields are populated, which means the delta stores are leveraged.

-- Monitoring CCI: -- Look at RG's / delta stores in use? select object_name (object_id) as Table_name, * from sys.dm_db_column_store_row_group_physical_stats where object_id = object_id('LINEITEM_CCI') order by closed_time desc

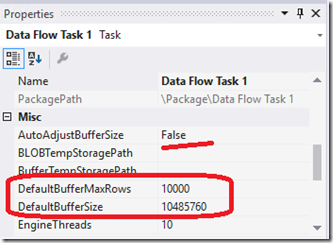

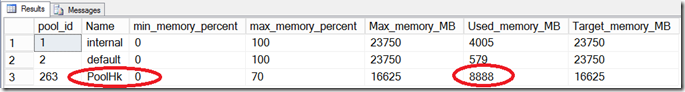

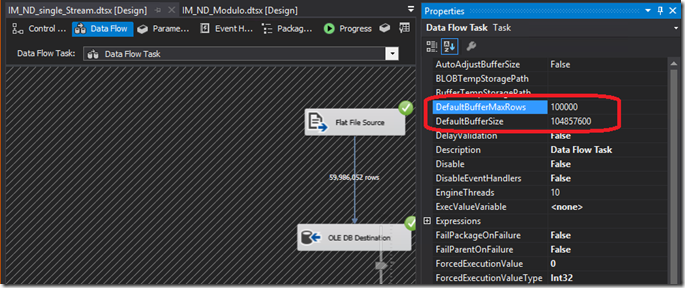

This was the moment to reach out and check with to my colleagues who luckily have noticed this to and a solution is already there! (see: “Data Flow Buffer Auto Sizing capability benefits data loading into CCI”). To fully leverage the CCI streaming capabilities you have to increase the Default memory BufferSize & MaxRows settings:

Change the these into 10x larger values:

– DefaultMaxBufferRows from 10000 into 1024000 and the most important one:

– DefaultBufferSize from 10485760 into 104857600.

Note: the new ‘AutoAdjustBufferSize’ setting should be set to ‘True’ when you load very wide Rows of data.

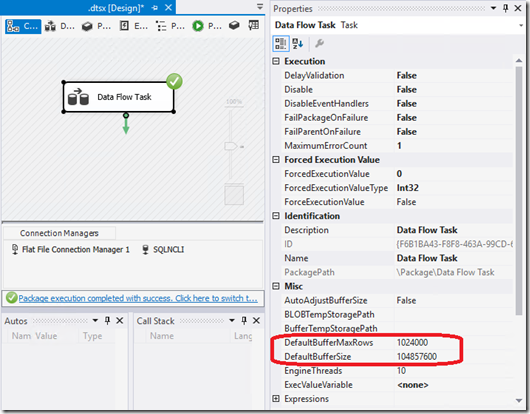

Change also the values for the Destination adapter:

– Rows per Batch: from none into 102400

– Maximum Insert commit size: from 2147483647 into 102400

Wrap-Up

The feature parity introduced with SQL Server 2016 SP1 opens up a whole new range of possibilities to benefit from! Hopefully the walkthroughs above help you to max out Bulk Insert, BCP and SSIS performance when loading data into a Clustered ColumnStore Index!

loading...

loading...