With the release of CTP3 SQL Server 2016 and its native In-database support for the open source R language you can now call both R, RevoScaleR functions and scripts directly from within a SQL query and benefit from multi-threaded and multi-core in-DB computations. Yet another SQLServer milestone! The R integration brings a lot of benefits and new scenarios to your end users; it brings the utility of data science to your applications without the need to ‘export’ the data to your R environment!

Installing & Enabling SQLR integration

To get started you will need to install and configure SQL Server 2016 CTP3, Revolution R Open (RRO) and Revolution E Enterprise (RRE) on the server.

The versions I installed:

– SQL Server 2016 (CTP3.0) – 13.0.700.139 (X64)

– Revolution R Open, The Enhanced Open Source R distribution (R version 3.2.2 (2015-08-14) )

– Revolution R Enterprise for SQL Server 2016 Community Technology Preview (CTP) 3 – (7.5.0)

(To download the bits: https://msdn.microsoft.com/en-us/library/mt604847.aspx)

The R integration uses a new service called ‘SQL Server Launchpad’ to ‘launch’ Advanced Analytics Extensions processes; it enables the integration with Microsoft R Open using standard T-SQL statements. (Disabling this service will make Advanced Analytics features of SQL Server unavailable).

To enable the R integration, you have to run the sp_configure ‘external scripts enabled’ command and grant permissions to users to execute R scripts via the new db_rrerole role:

sp_configure 'external scripts enabled', 1

reconfigure

GO

alter role db_rrerole add member HenkR;

Connecting to SQL Server from your favorite R environment

Traditionally you would connect from your favorite R IDE to SQL Server to retrieve data for analytics; or you can use the R GUI environment that is part of the installers bits. The default installation path to RGUI is: “C:\Program Files\RRO\RRO-3.2.2-for-RRE-7.5.0\R-3.2.2\bin\x64\Rgui.exe”. Also popular is the R Studio Desktop that is separate downloadable from: www.Rstudio.com. (the version I installed to get started is V0.99.486).

1) Connecting R to SQLServer

To connect your RStudio environment to SQL Server the traditional way to read data (and import it into memory or a local *.xdf file) would like something like this: (it requires to setup a proper connection string;

use the ‘hostname\\instancename’ to connect to a SQLServer named instance and pull some data from a table:)

sqlServerConnString <- “Driver=SQL Server;Server=SQL2016EE\\SQL2016CTP3;

Database=Oldskool_DB;Uid=sa;Pwd=MyPassword”

sqlServerDataDS <- RxSqlServerData(sqlQuery =

“SELECT * FROM LINEITEM_Small”,connectionString = sqlServerConnString )

2) Offloading R scripts to SQLServer

Pulling a large dataset from a database and processing it locally would have required the writing into a local file which is a single threaded process. This can take a long time… So luckily with the CTP3 we can now bring the R script to the Data and process it there! To execute and R script directly from an SQL query use the new ‘sp_execute_external_script’ to, for example, calculate the mean of L_Quantity via R:

EXECUTE sp_execute_external_script @language = N'R' , @script = N'OutputDataSet <- data.frame(mean(InputDataSet[,1]))' , @input_data_1 = N'SELECT L_QUANTITY from LINEITEM_small as L_Quantity ' WITH RESULT SETS ((col int not null)); go

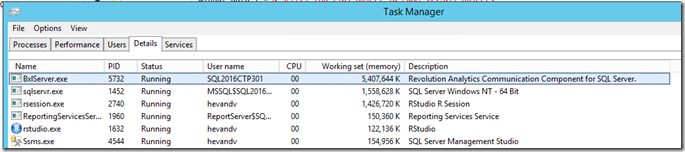

SQL Server will execute this external script via its new launchpad service in the BxLserver.exe process (The Revolution Analytics Communication Component for SQL Server).

R Memory allocation

What would an article about ‘R’ be without a note about memory usage!

By default the SQLR integration service in the CTP3 build will use up to 20% of the total physical memory available on the host for all its In-DB running R sessions. You can monitor the Memory usage of the BxLserver.exe process via the Taskmanager Working Set memory column.

If you cross the line your query will fail with a message similar to the one below:

Msg 39004, Level 16, State 20, Line 8

A ‘R’ script error occurred during execution of ‘sp_execute_external_script’ with HRESULT 0x80004004.

Msg 39019, Level 16, State 1, Line 8

An external script error occurred:

Error in sqlSatelliteCall() :

Calls: sqlSatelliteCall -> .Call

Execution halted

Invalid BXL stream

STDOUT message(s) from external script:

Failed to allocate or reallocate memory.

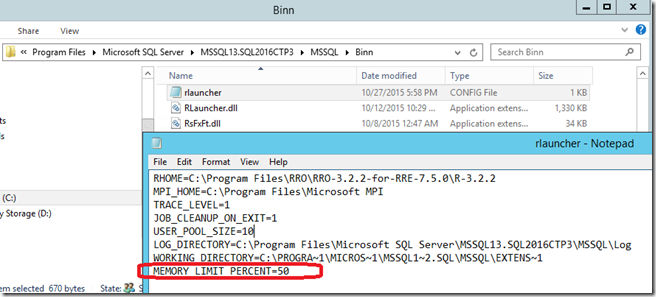

The resolution for running out of memory like this would be to increase the max. memory allocation which is a setting in the Rlauncher.config file (which is located in C:\Program Files\Microsoft SQL Server\MSSQL13… \MSSQL\Binn directory). Increase the default setting to a value that is still safe so it will not interfere with the sqlserver memory allocation.

The last line in the file ‘MEMORY_LIMIT_PERCENT=20’ controls the maximum percentage of physical memory which gets allocated to all the R sessions. Increase the value to allow the R service to consume more memory:

Wrap-Up

Embracing and operationalizing R functionality via the new SQLServer R Services integration has become very simple! It brings a lot of new possibilities to apply advanced analytics to your SQL data, ranging from data exploration to Predictive Modeling. Getting started with R might be a bit challenging but it will enrich your reports for sure!