For benchmarking purposes I was restoring a backup taken on a 4-node APS (Microsoft Analytics Platform System) onto an 8-node APS when the unexpected happened: I started to receive warnings that the system was running low on available disk space! (For those of you who have never worked with APS: an 8-node full-rack APS can store hundreds of terabytes!)

It turned out that some colleagues had been way too generous in allocating database space. To me, this was an opportunity to explore storage management, and I learned something new about restoring APS databases that makes perfect sense once you get it! Below is a walkthrough, with recommendations in case you have to restore an APS database yourself.

The APS database to back up

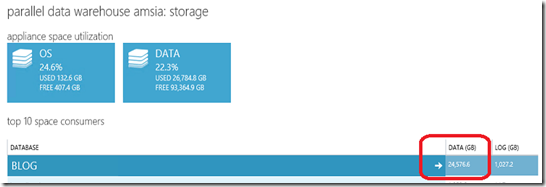

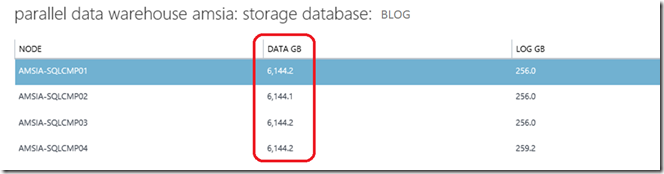

The database to move onto a larger APS is 24 TB in size and is called ‘Blog’:

The database is distributed in 4 equally sized portions across the 4 compute nodes SQLCMP01-SQLCMP04:

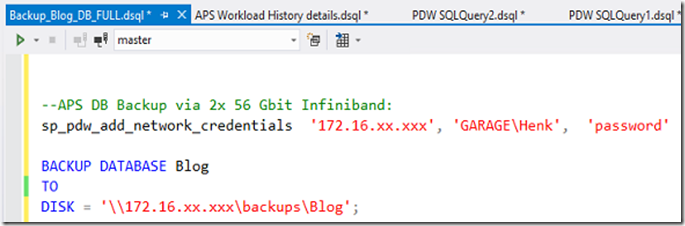

Backup of an APS database

To back up the database, we first register the credentials of a Windows account that has write access to a file share on the target server, using the ‘sp_pdw_add_network_credentials’ stored procedure. On the backup server you have to set up a file share called ‘backups’ and grant read/write permissions to that account. Then start the ‘BACKUP DATABASE’ command.
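The two steps described above might look roughly like the following sketch. The server name, account, password, and backup directory name are placeholders and not values from the original setup; only the share name ‘backups’ and the database name come from the text.

```sql
-- Sketch only: 'backupserver', the account, the password and 'Blog_Full' are placeholders.
-- Register the Windows account that the appliance uses to reach the backup server.
EXEC sp_pdw_add_network_credentials 'backupserver', 'MYDOMAIN\backupuser', '<password>';

-- Full backup of the Blog database into a directory on the 'backups' share.
BACKUP DATABASE Blog
TO DISK = '\\backupserver\backups\Blog_Full';
```

The server name passed to the stored procedure should be written the same way as in the UNC path, so that the stored credentials match the share being accessed.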

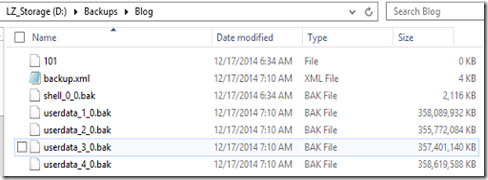

The full backup to the remote file share completed in 36 minutes 14 seconds and resulted in a backup set of 1.3 TB on disk. The dataset from each compute node was saved in parallel into separate userdata_x_0.bak files. The primary bottleneck is the write throughput of the file share we are writing to: ~640 MB/sec (see Fig. 4).
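As a quick sanity check on that throughput figure, the average write rate can be derived from the backup size and duration. This is only a back-of-the-envelope sketch; it assumes 1 TB = 1024 × 1024 MB and that data was written at a steady rate for the whole run.

```sql
-- Average write rate in MB/sec: 1.3 TB written in 36 minutes 14 seconds.
SELECT 1.3 * 1024 * 1024      -- backup set size in MB
       / (36 * 60 + 14)       -- elapsed time in seconds
       AS approx_mb_per_sec;
```

The result lands in the neighborhood of the ~640 MB/sec observed on the file share, which supports the conclusion that the share was the limiting factor.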

Restoring an APS database

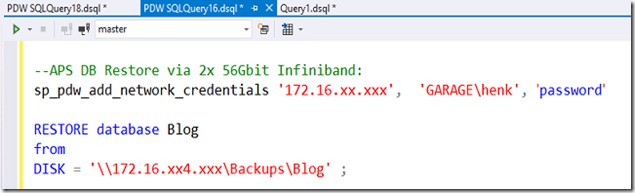

To restore this backup onto another APS, we do the same: register the credentials for the backup server on the destination appliance and start the ‘RESTORE DATABASE’ command.
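A minimal sketch of the restore side, under the same assumptions as before: the server name, account, password, and directory name are placeholders, not values from the original environment.

```sql
-- Sketch only: 'backupserver', the account, the password and 'Blog_Full' are placeholders.
-- On the destination appliance, register credentials for the backup server.
EXEC sp_pdw_add_network_credentials 'backupserver', 'MYDOMAIN\backupuser', '<password>';

-- Optional: inspect the backup set before restoring it.
RESTORE HEADERONLY FROM DISK = '\\backupserver\backups\Blog_Full';

-- Restore the full backup as database Blog.
RESTORE DATABASE Blog
FROM DISK = '\\backupserver\backups\Blog_Full';
```

Checking the header first is a cheap way to confirm that the appliance can reach the share before starting a long-running restore.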

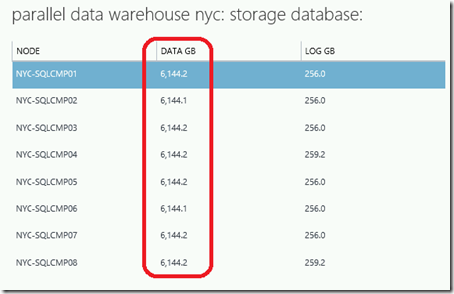

Although it was a straightforward backup and restore exercise, executed by the book, I noticed the following: a backup set that gets restored onto an appliance with a larger number of compute nodes will grow in allocated database size, in proportion to the number of compute nodes. APS initially restores the database onto 4 nodes to match the source configuration and then internally redistributes the data across all 8 nodes. On the extra nodes, the same amount of space is allocated as on the original nodes (see Fig. 6).

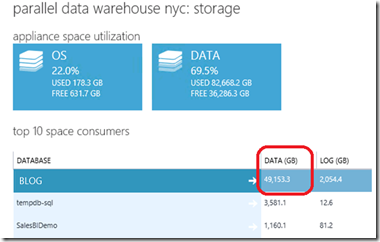

The restore operation resulted in a database with twice the original allocated size on the larger destination system.
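The growth can be estimated upfront with simple arithmetic. The sketch below uses the figures from this post and assumes that the per-node allocation of the source is carried over to every compute node of the destination.

```sql
-- Sizing estimate: 24 TB allocated on 4 nodes, restored onto 8 nodes.
SELECT
    24.0 / 4     AS allocated_tb_per_node,       -- 6 TB per compute node on the source
    24.0 / 4 * 8 AS allocated_tb_after_restore;  -- 48 TB if all 8 nodes get the same allocation
```

Replacing 4 and 8 with your own source and destination node counts gives a first estimate of the space to reserve on the destination appliance.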

How to check data volume in use

A couple of simple steps let you check the disk space actually in use within the database and free up over-allocated space.

DBCC PDW_SHOWSPACEUSED

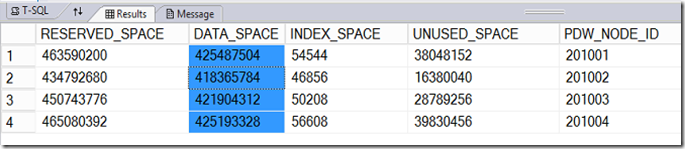

To check how much of the allocated space actually holds data, you can run the DBCC PDW_SHOWSPACEUSED command.

USE BLOG; DBCC PDW_SHOWSPACEUSED;

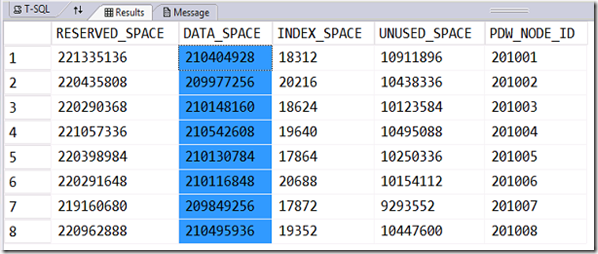

This command outputs the allocated space per compute node, in kilobytes. In our case approx. 1.6 TB of compressed clustered columnstore data resides inside the database; the 24 TB allocated for the database is therefore overkill, and we can safely free up some of the space.

To free up space we can use the ALTER DATABASE command and define the desired new space allocation. We can also shrink the transaction log to a minimal size (which also minimizes the time spent recreating the log files). To change the database size to a maximum of 5 TB and the log to 5 GB:
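If the database-level numbers look suspicious, the same command can be pointed at a single table to see which objects hold the data. The table name below is hypothetical and only serves as an illustration.

```sql
-- Illustration only: dbo.FactSales is a hypothetical table name.
USE BLOG;

-- Space used by one table, reported per distribution.
DBCC PDW_SHOWSPACEUSED ( "dbo.FactSales" );
```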

ALTER DATABASE BLOG SET ( AUTOGROW = ON , DISTRIBUTED_SIZE = 5000 GB );

DBCC SHRINKLOG ( SIZE = 5000 MB );

Auto redistribution of data during Restore

After the initial restore onto the 4 compute nodes completed, a ‘Create table as select’ (CTAS) command is issued and all the distributed tables are automatically redistributed across all 8 compute nodes; the DBCC PDW_SHOWSPACEUSED output shows the data is nicely spread across all the nodes:
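The statements that APS issues internally are not shown in this post. Purely as an illustration of what a CTAS that redistributes a table looks like, here is a sketch with hypothetical table and column names:

```sql
-- Illustration only: dbo.FactSales, dbo.FactSales_redistributed and CustomerKey are hypothetical.
-- CTAS writes a new copy of the table, hash-distributed across all compute nodes.
CREATE TABLE dbo.FactSales_redistributed
WITH (
    DISTRIBUTION = HASH (CustomerKey),
    CLUSTERED COLUMNSTORE INDEX
)
AS
SELECT * FROM dbo.FactSales;
```

Because CTAS writes a complete new copy of the data, the destination needs enough free space to hold both copies while the redistribution runs.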

Result of the Restore onto 8 compute nodes

Wrap-up

Even on an APS system that has the capacity to store hundreds of terabytes, it may be worth checking the overall space allocated to databases every now and then. Also, when planning to restore a database onto a larger APS, check the overall database size upfront to calculate the space needed on the destination APS, since the allocation will grow in proportion to the number of compute nodes. If needed, you can free up unused space from the database and its log files upfront by running the ‘ALTER DATABASE’ and ‘DBCC SHRINKLOG’ commands.