Reading as fast as possible from a table with SSIS (Part II)

Recently I blogged on how to be as selective as possible while reading from and OLE DB Data Source (Part I) and how to load the data from a single flat file as fast as possible into a SQL Server table. But you probably noticed that “Out of the box” SSIS reads data faster from a flat file than from a SQL table. In this article I will share my latest trick on how to speed up the reading from a single table, typically at least twice as fast!

Time to beat, the “Native” OLE DB source speed

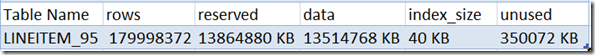

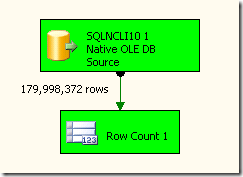

The first step, like always, is to check the default throughput and duration by counting rows from the Native OLE DB Data Source.

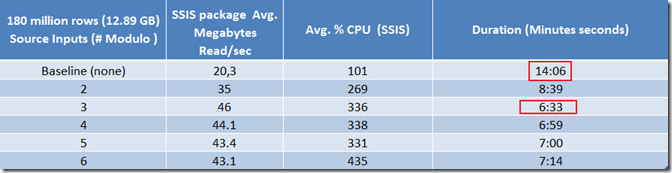

In our case it takes 14 minutes and 6 seconds to read all approx. 180 million rows or 12.89 GB from a SQL Server table with 16 columns of data. For comparison; reading the same data from a single flat file takes only 7 minutes 57 seconds.

So where is this difference coming from? Primarily because of the IO Packet size that’s being used I would say. SSIS reads with 128 KB blocks from a flat file and uses by default 4KB (the default network packet size) which is changeable into max. 32 KB to request data from SQL Server (32KB is also the maximum for the new SQL2008 R2 version). To retrieve the data faster we have to find a way to handle more IO requests in parallel!

To set the baseline just build a package and count the rows from the Native OLEDB Source. (Don’t forget to change the Packet Size from 0 into 32767 in the Connection Manager).

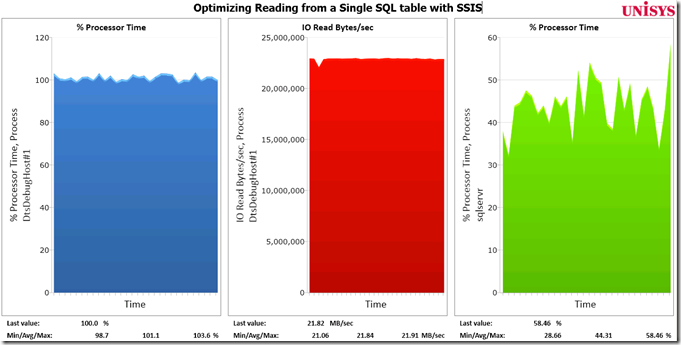

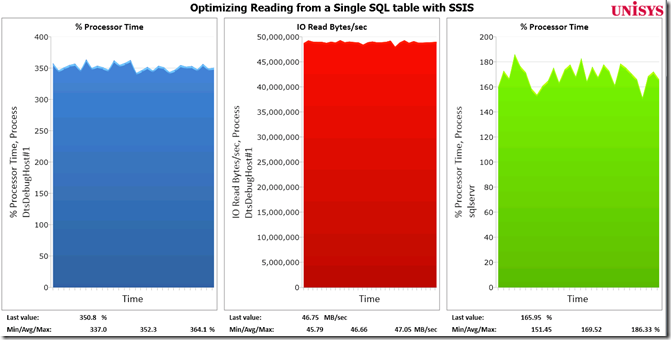

The Windows Performance Monitor counters to check are the CPU load of both the SSIS process (DtsDebughost when executing the package from within BIDS or DTEXEC when started from the command line) and the SQL Server process. Also check the amount of bytes we are reading: select the IO Read bytes/sec counter from the process.

Adding parallelism by using the Modulo algorithm

The optimization trick I would like to point out to you is based on using the Modulo arithmetic operator that can be used with the numeric data type. It returns the remainder of one number divided by another. For example, if you want to read with 3 parallel streams use can use modulo 3 ( % 3 ). You can pick up the 3 output streams by specifying as the remainder values = 0,1 and 2. (Using value’s less than 0 or larger than 2 will return 0 rows when modulo 3 is used.)

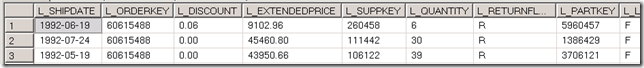

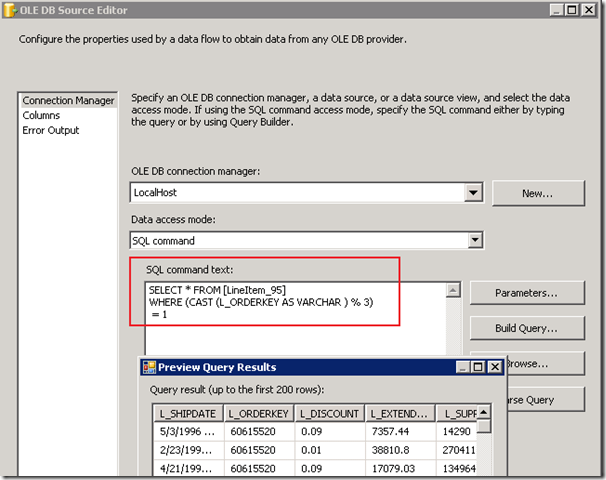

You can check the output also by running the query in SSMS or with the BIDS Preview option from the OLEDB Source Editor.

WHERE (L_ORDERKEY% 3) = 2

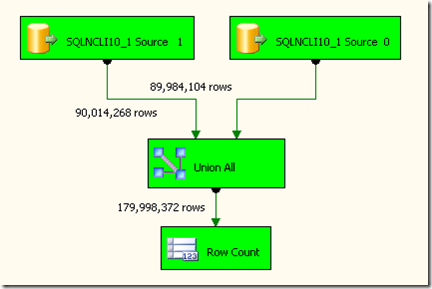

When you build this query into an SSIS package, you will notice that initially it does creates parallelism; the rows are quite nice divided and read from the two data sources, But unfortunately the total package execution time doesn’t come down, which is a bit strange. (After all, adding more sources is an optimization trick we discovered already long time ago, back in 2004 while testing an early Beta 2 release of SSIS).

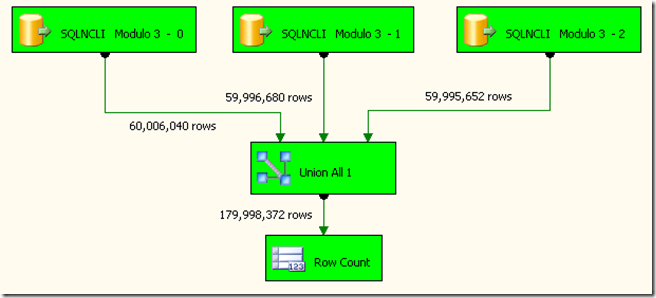

Specify in your package the multiple data sources and merge the result with the Union All component:

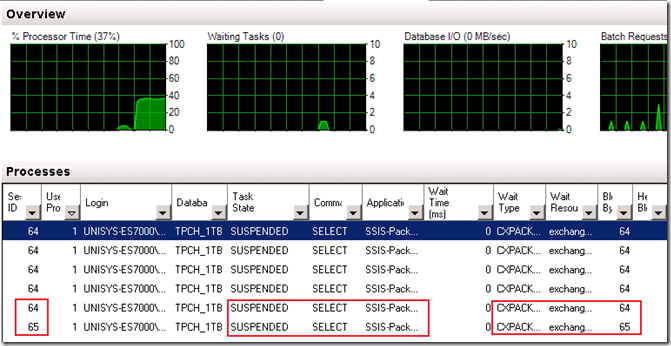

The Activity monitor shows us quickly that indeed multiple SPIDS are fired by the SSIS package but many are Suspended and actually causing unnecessary synchronization:

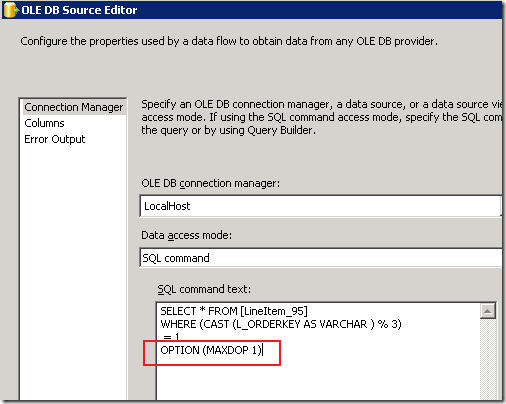

Luckily this can be resolved quickly by specifying the query hint “OPTION (MAXDOP 1)”. This removes the synchronization overhead without sacrificing on throughput in this case.

Reading multiple times from the same SQL table

Time to put it to the test and increase the number of Data source components in the package. Each OLE DB data source should point to the same input table, just modify the modulo factor and the output separator when adding more. The best result I typically achieve while reading from the same data source 3 or 4 times in parallel:

When we check the perfmon counters one more time, you’ll see the IO throughput actually went up from initially 21. MB/sec to 46.6 MB/sec on average, so it has more than doubled!

The CPU consumption of both the SSIS and SQLServer process have also increased ; SSIS from 100% to 350% (3.5 CPU) and SQLServer is using also an extra CPU.

Results

The overall package execution duration to read the same 180 million rows (12.89 GB) decreased from initially 14 minutes 6 seconds to only 6 minutes and 33 seconds when reading the data with 3 parallel streams!

Wrap Up

When you have to speed up reading large amounts of data from a single table in your SSIS package, you can decrease the duration by more than half by adding some form of parallelism into your package by using this Modulo trick. Typically reading data from the same SQL Server table with 3 or 4 parallel streams provides the best result!

20 Responses Leave a comment

top post

Can you explain why you use this WHERE clause:

WHERE (CAST (L_ORDERKEY AS VARCHAR ) % 3) = 2

Casting the column to varchar is pointless if you want to do a modulo operation on it, since it will have to do an implicit cast (back) to a numeric type. It may not impact the SSIS operations but it will likely slow the SQL Server portion.

Hi Rob,

the explanation is the title of this post, “to read as fast as possible” ;-) using the casting to varchar makes it just a little bit faster, using 10% extra CPU resources for the SQLServer process. But you’re right, it’s a bit confusing ; removing the casting from the query will also do trick:

SELECT * from dbo.LINEITEM_Hash96Key_SSD

WHERE ( L_ORDERKEY % 3) = 0

option (maxdop 1)

Hi Chris,

if you get only 1/3 of the data , It might be that you forgot to set the module function correct in each of the trees ? like:

select * from dbo.LINEITEM_Hash96Key_SSD WHERE (L_ORDERKEY% 3) = 0

select * from dbo.LINEITEM_Hash96Key_SSD WHERE (L_ORDERKEY% 3) = 1

select * from dbo.LINEITEM_Hash96Key_SSD WHERE (L_ORDERKEY% 3) = 2

(You say you are processing 50 mill rows from a staging db in 6 mins == 138K rows/sec; with an SSIS rowcount component you can check if the total row numbers do match the numbers of rows in your staging database. It will also tell you how fast you can read from your datasource and the effective MByte/sec. Maybe there’s some more room for optimizations).

— Henk

I’m new to parallelism so firgive me if my question is elementary. But I am confused on how the query hint OPTION(MAXDOP 1) would cause the spids to all run at same time. I’ve read that this “Suppresses parallel plan generation. The operation will be executed serially” (Found at http://stackoverflow.com/questions/163917/optionmaxdop-1-in-sql-server). That makes me think that OPTION(MAXDOP 1) only allows one CPU. Wouldn’t it be better to use all possible CPU’s?

Hi Dan,

the trick is that you start multiple, separate, sessions to sql server, each session is serving a portion of the data, and… each session (SPID) is indeed handled by just 1 CPU (SQL scheduler); by suppressing parallelism within each session you gain performance (as shown in the picture, otherwise the SPIDS have primairly status “suspended”, so not doing anything usefull)

However, when you read from a partitioned table, which is a multithreaded operation by nature, you will see that using a higher maxdop number may increase throughput.

(read more on this topic on: http://henkvandervalk.com/optimizing-sql-in-memory-table-scan-processing-speed, where reading from a partitioned table uses 88+ cores;-))

Hope this helps

Brgds,

Henk

So does the (MAXDOP 1) stop the cpu from working with other process at the same time and concentrate on the current query?

Dan, you cannot jump into that conclusion: with MAXDOP 1 the query will be handled by a single SQL Scheduler which uses a single CPU. However if there are multiple queries to serve, these will also be scheduled.

Henk, I have an ssis pkg where I have 29 tables I’m wanting to reload the way you’ve described above. I’m adding the trees one tree at a time and watching the preformance. The first tree ran by itself fine but when I’ve added another there are 6 session ids but only 3 are running, 1 says suspended and the other 2 have nothing in the status. I set the pkg property MaxConcurrentExecutables = 3 (Only have 2 DataFlows at the moment) and have set each dataflow’s EngineThread = 3 to handle the 3 data pipelines per dataflow. I’m running this in a job on a SQL Server 2005 box with 8 3.2 Gig processors and 64 Gig of Ram (Which I’ve never seen go above 50%, maybe the setting for amt of memory allocated for SQL Server needs to be adjusted). Looking at the Activity Monitor my processor time pretty much stays in the 30% to 50% range which drives me mad thinking that there’s plenty of processor time available but my sessions are in suspended state. Any idea what I’m doing wrong?

Henk, Great suggestions, particularly like the modulus operator to split into exactly how many parallel streams one wants to use.

I’ve been using a sequence container with multiple datasources inside it to have it run separate threads for parallel loads (from remote Oracle source) instead of your method above with the union all and maxdop. Do you know if there is any disadvantage to using that method (does it cause cxpacket sync waits?) It seems to work correctly, and I’ll try to run some tests but just in case you tried this and knew.

thanks,

hi Steve,

if you are reading from different tables using multiple datasources is perfectly fine. From what I’ve seen on customer sites, typically reading from oracle tables is a bit slower than the actual writing into a SQLServer table ; you can check this yourselves by using the Rowcount component trick to check how fast you can actually read with just the datasources. (are you using separate downloadable Attunity Microsoft Connectors for Oracle from the Microsoft Download Center? ) If needed, you can write out the data into multiple SQL Server partitions/tables using the modulo trick. (Please don’t forget to also check with Taskmanager the network throughput numbers:-) )

Let me know your results!

good luck

Henk

pingback: http://sqlserverstuff.wordpress.com/2011/10/26/moving-large-amounts-of-data/

Hi Henk

do you think that the “OFFSET and FETCH” will help in this example?

I assume it will, basically it wil play the role of “WHERE (L_ORDERKEY% 3) = 2”.

I havent tested it yet.

Hi Henk

I did what optimization and loaded a 10 GB CSV file in 7m51s on a Fast Track. What do you think?

Cheers

Roger

Hi Roger,

10GB / 471 Seconds == 21.7 MB/sec ;

this looks like a single Bulk Insert task only? Is this throughput / time taken acceptable for you ?

If not, have you tried some options to create parallelism ( with SSIS and the modulo trick, or load multiple smaller files in parallel ?)

Brgds,

Henk

Hey Henk,

This works great. So glad I found this page. I have been using MAXDOP 1 on all my large table pulls for years. Recently, I started a job where there are quite a few legacy DTS packages that do massive table to table transfers for ETL processes.

Splitting the tables using the %3=(0-2) worked great. I am even tempted to split them out more to see how many times I can split my source before my netapp bricks itself.

Well done sir.

I run some tests of data extraction with the method presented here. I checked it on smaller table 1.5 GB (12 mln rows) on my virtual machine with 2 procesors. The ssis package consists only from ole db data sources, 1 union all and 1 multicast componets. In properties MaximumErrorCount set to 4 (I have only 2 processors) and EngineThreads to default 10. In standard scenario with only 1 data source the ssis pulled data in 30sec. I got the best result with only 2 pararell data sources with time 25sec. I can’t go to 50% of performance improvment. Have I missed something or this solution works great only for realy big tables?

Hi Kamil,

(1.5 GB/ 25 seconds == 60 MB/sec; this is close to the limit of a 1Gbit network connection; are you using iSCSI for your VM’s)?

Just doublecheck the maximum source speed by reading from the source table with the modulo option (%2 for 2 cpu’s) and maxdop 1 with the rowcount as destination (as described in my blog).

this will tell you how fast you can read. If you can read much faster than 60 MB/sec than this means you have to optimize some other portions of the package!

-Henk

Hi Henk,

Any idea for non numeric key fields where modulo is not applicable.

Hi Gowri, that is indeed a tricky one.. would you have the option to add an extra column? (Either as part of your source data or generate one on the fly in SSIS; in the second please also add / doublecheck the total number of rows read..)