Speeding Up SSIS Bulk Inserts into SQL Server

Since we set the ETL World Record with the introduction of SQL2008, a lot of insights, tips & tricks have been published on what it takes to load data fast into SQL (see also the Data loading Performance Guide). The trick described below is one to remember if you are being challenged to load a single flat file as fast as possible into SQL Server.

Challenge: Loading the data from a single large file as fast as possible into a table

The fastest way to do this is to build an SSIS package to handle the pre-processing of the data type conversions so this doesn’t have to be done on the SQL Server side, saving precious CPU ticks and more important – latency. Next thing is to run this SSIS package on the SQL Server itself and use a fast, low latency, in-memory connection to eliminate the network stack overhead.

Step 1) Check the speed of your data source

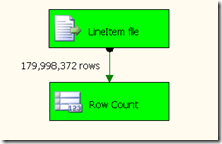

As always, start with checking the throughput of the data source by using a Row Count component (see picture). Select the input columns to insert (see also Speeding up reading from a data source). Out of the box it takes 8 minutes and 6 seconds to read the Full 22.7 GB of data from the flat file with 16 columns of data.

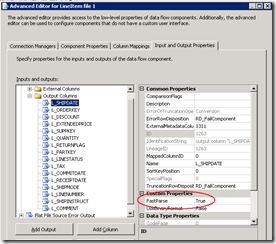

By enabling the Fast Parse option on the appropriate Output columns it takes only 5 minutes 53 seconds to read the entire file, which is 13.7 % faster (or 2 minutes 13 seconds less).

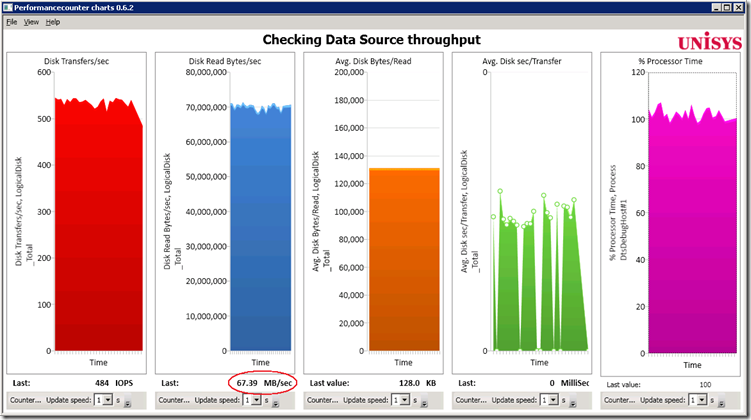

The Perfmon counters show we read from the file with about 70 MBytes/sec, 128 KB blocks and it takes approx. 1 CPU and “0” milliseconds per transfer to read from Solid State:

Step 2) Determine the native bulk Insert speed

First apply a couple of optimizations to the SSIS package to speed up the Bulk Insert;

(- Use the Fast Parse option)

– Use the SQL Server Native Client 10.x OLE DB provider for an In-Memory, high performance connection

– Set the Packet Size to 32767

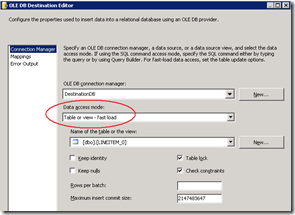

– Select the OLE DB Destination Data Access mode “Table or View – fast load” option (see picture ):

Bulk Insert Result: It takes 19 minutes 55 seconds to insert 22.7 GByte of data , or almost 180 million rows.

This is an average 19 MByte/sec or 150000 rows/sec.

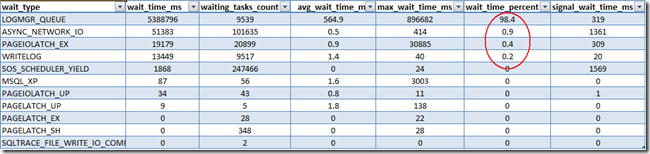

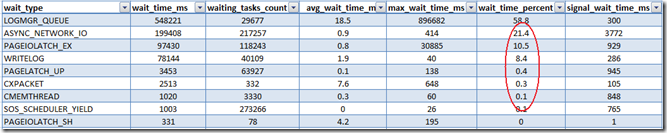

According the Waitstats the execution of this task seems pretty efficient:

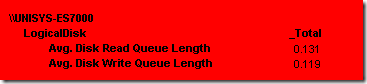

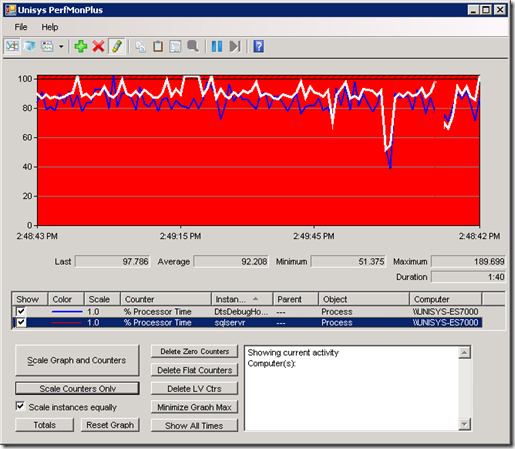

During the validation of the source throughput we measured an effective Read speed which was 3.5 times faster than the current throughput we are getting with Bulk Inserting. It’s good to know that the reader isn’t our primary bottleneck. Looking at some other basic perfmon counters, like the disk write queue length and the CPU load of both the SQLServer and the DTSDebughost (BIDS) process don’t show any significant bottleneck; both the processes use less than a CPU each.

Optimizing the Bulk Insert

To increase the overall throughput we have to build some parallelism into the data flow. SSIS will allow us to do just that! Let’s find a way to spread the load and bulk insert the data from the same flat file into 4 different destination tables, instead of just one, and find out if we can get the throughput up !

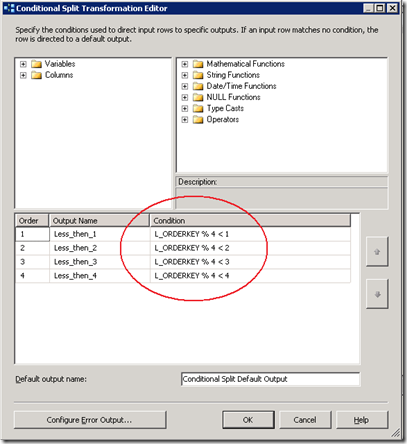

Step 3) Adding a conditional Split to the SSIS Package

Since we can read 3.5 times faster from our data source than we are writing it out to SQL Server, we should spread the load across at least 4 streams. In SSIS you can build this into a package with the Modulo function (%). The Modulo function provides the integer remainder after dividing the first numeric expression by the second one, the ultimate striping mechanism!

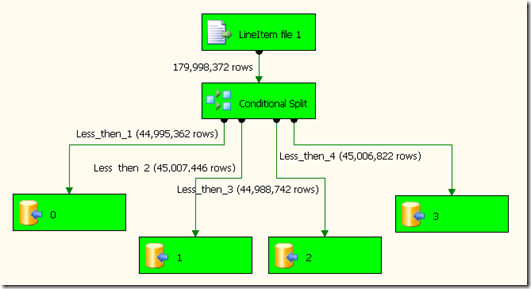

Use the 4 outputs to feed 4 different table destinations:

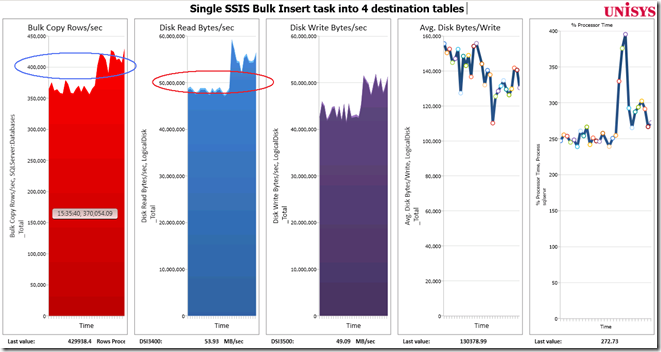

Result: Magic! the package completed loading the same 22.7 GByte in only 39% of the time: 7 minutes 47 seconds ! (versus 19 min. 55 sec.)

That’s at least 2.5 times faster !

The SQL Waitstats show some different figures also;

Unfortunately, I think there isn’t much we can do about either the “ASYNC_SYNC_NETWORK_IO”; we are using the max. 32 KB packet size and an in memory connection. Also the “PAGEIOLATCH_EX” (caused by an exclusive lock on the buffer while the page is transferred from “disk” to cache) is a tricky one; I tried to reduce the PAGEIOLATCH_EX by adding a second flat file data source that reads from the same input file, each processing 2 tables, but no, I can’t get it any faster. Think we have to live with the 2.5+ times faster for now!

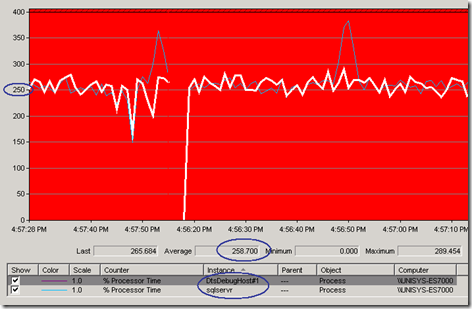

Both the SQL Server process and BIDS use on average more than 250% Processor time, or 2.5 CPU’s each to service this optimized SSIS Package.

Step 4) Consolidation

Create either a View across the 4 tables or build a partitioned table that allows you to switch the 4 tables back in into a single large table if that’s needed.

Wrap-Up

When you have to load the data from a single flat file as quickly as possible into SQL Server, the technique described above will bring you a significant increase in Bulk Insert throughput and a decrease in execution time needed. Also start the SSIS package on the SQL Server, use a solid State disk as staging area for the flat file and stripe the data into multiple tables. The example above showed we can load the same data file at least 2.5x times faster!

26 Responses Leave a comment

One of the best and clearly laid out post in a while. Thanks for sharing.

Very useful post, Henk. I am going to bookmark it for future use.

I am a 1,000,000,000 (billion) percent sure that is and will be the best 2010 article that I have seen for SSIS

Thank you Henk

Hi Henk,

I’m betting that the ASYNC_NETWORK_IO waits are occurring on the components doing the bulk load, as they wait for more rows. This may indicate that you actually have too many destinations. But you’d need to check out the wait stats in real time while the load is running to verify this. You can use my Who is Active tool for that if you’re in the mood:

http://tinyurl.com/WhoIsActive

Best,

Adam Machanic

Shouldn’t those mudulo functions be L_ORDERKEY%4=0, L_ORDERKEY%4=1 etc? With the < function you'll be including cases where the modulus=0,1,and 2 where you're selecting L_ORDERKEY%4<3

Hi Scott,

you can use both ( see also http://henkvandervalk.com/reading-as-fast-as-possible-from-a-table-with-ssis-part-ii where I use the ‘=’ );

indeed, when using the < in your SSIS package you have to make sure you set the ordering right ; start with L_ORDERKEY%4< 1 as the first one in the list followed by L_ORDERKEY%4< 2 etc.

(let me know the throughput gain you find with the trick ;-))

— Henk

What about using a RAM drive instead of an SSD?

Hi Andrew, good catch ! :-)

yes, I worked with Ram drives in the past but the quality/read throughput wasn’t that impressive at the end, especially when reading multiple files in parallel.

(I did found that NTFS formatting a ram drive with 64 KB blocks instead of 4KB brings a huge performance boost!) Please share your test results!

Was wondering if it was possible to use the SSIS package to load into multiple tables from a single csv file and if you have come across any examples which could be helpful to me in implementing it.

Would appreciate any pointers.

Thanks.

Hi , yes thats possible !

I have written a blogpost on this topic some time ago: please check out :

http://henkvandervalk.com/speeding-up-ssis-bulk-inserts-into-sql-server

Hi , yes thats possible !

I have written a blogpost on this topic some time ago: please check out :

http://henkvandervalk.com/speeding-up-ssis-bulk-inserts-into-sql-server

Henk, Thanks a lot for this highly informative post.

I am going to use this technique in my project. I have a question regarding consolidation of split tables. Question is that when we would consolidate tables, would data be in same sequence, in table, as in source flat file?

hi Spica,

if you switch in the partitiona into a single table the data will remain in their partitions; you can benefit from this;

(a good article to read might be : http://msdn.microsoft.com/en-US/library/ms345146(v=SQL.90).aspx#sql2k5parti_topic6)

(you can also use an ORDER BY when reading the data from the table)

Very Nice and useful post. Thanks for posting

Hey,

Great Post indeed. I have have somewhat different scenario where I have two flat files and one destination table. catch here is I can not split this table. What do you think will be the best method to load?

Thanks,

Nimesh

Hi Nimesh,

fastest way to load 2 flatfile into a single destination table would be to load each flatfile into a separate table and partition switch them in afterwards.

-Henk

Result: Magic! the package completed loading the same 22.7 GByte in only 39% of the time: 7 minutes 47 seconds ! (versus 19 min. 55 sec.)

I have loaded 15.8 million using this approach (4 destination tables). It took 11 minutes. But without 4 destination (1 destination) it took 19 minutes. But I need actually one table. I attempt to load the data in 4 partitions. Then tried to merge them.

ALTER PARTITION FUNCTION PFTableMerge() MERGE RANGE (1);

ALTER PARTITION FUNCTION PFTableMerge() MERGE RANGE (2);

ALTER PARTITION FUNCTION PFTableMerge() MERGE RANGE (3);

ALTER PARTITION FUNCTION PFTableMerge() MERGE RANGE (4);

Unfortunately it took more than 30 minutes to complete. With 2 core (16 logical processor) with 48 GB of memory. Am I missing something?

hi, please use the switch Partition option instead, like:

ALTER TABLE CUSTOMER_3 SWITCH TO CUSTOMER PARTITION 3

ALTER TABLE CUSTOMER_4 SWITCH TO CUSTOMER PARTITION 4

Hi, how would you load a lrage text file running in GB into a table on server

which is having less memory. for eg loading a 20GB text file into a table

on a server having 4gb of memory using SSIS.

Hi Sid, just start and load it! SQLServer will handle it for you.

hi Henk,

what is the benefit of using views for the same table in destination?? as you mentioned in section 4 (Consolidation) of this article.

Thanks,

Zaim Raza

Hi Zaim, the result of step 3) is inserted data that is stored in 4 different tables. To turn this into 1 logical representation of the data you can use a view to consolidate, or better switch partitions in into a single table.

Very nice/easy to follow this Henk. Will this logic work if we have thousands of flat files? to load into a single table? Please advise.

hi Sashi, for many files I would recommend to keep things simple and load as many files in parallel as the number of cores you have in your system. Loading data via SSIS is typical the fastest since you can leverage SSIS to do some preprocessing so that SQLServer just has to write out the data.

I have a flat file having 800k data..Normally it is taking too much time to insert the data. Need to create a package using parallel processing so that we could insert the data from flat file easily and in very less amount of time.

hope it helped to reduce the overall time taken Ranjay!