SQL under the hood (Part 2): Estimate the SQL Disk IO Workload based on the Virtual File Stats DMV.

Do you know how much data is being written and read on a daily basis by your SQL Server databases? SQL Server keeps track of these stats for you already; you just have to retrieve and interpret them correctly so you can turn this data into valuable information.

A couple of years ago I was looking for a way to quickly quantify the overall SQL workload of a server that I had never seen before and noticed the beauty of the sys.dm_io_virtual_file_stats DMV. This DMV returns the I/O statistics for each SQL database, for both the data and the log files, accumulated since the SQL Server instance was started. You can make a rough ‘guesstimate’ of how much data is physically being touched and processed by your disk subsystem if you divide these numbers by the uptime of the SQL instance and turn them into a metric you prefer, such as bytes per second or gigabytes processed per day.
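To make the arithmetic concrete, here is a minimal sketch with made-up numbers. The 5 TB read total and the 10 days of uptime are purely illustrative assumptions, not measurements from a real server:

```sql
-- Hypothetical values for illustration only; not taken from a real server.
DECLARE @bytes_read     bigint = 5497558138880;  -- 5 * 1024^4 bytes read since startup
DECLARE @uptime_seconds int    = 864000;         -- 10 days * 86400 seconds

SELECT
    -- average throughput over the whole uptime
    CAST(@bytes_read AS float) / @uptime_seconds AS avg_bytes_per_second,
    -- scale up to one day (86400 s), then convert bytes to GB (1024^3)
    CAST(@bytes_read AS float) / @uptime_seconds * 86400 / 1073741824 AS avg_gb_per_day;
```

With these numbers the estimate works out to roughly 512 GB per day.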

The VFS Query

Every step in the query below is documented and self-explanatory; it just queries sys.databases for the tempdb creation date to figure out when the SQL instance started (tempdb is recreated at every startup), and then queries the sys.dm_io_virtual_file_stats DMV.

    --------------------------------------------------------------------------------------------
    -- Script to snapshot the SQL SMP Virtual Filestats and calculate an average per day
    -- based on the SQL instance uptime (calc. in seconds)
    -- V1.1 by Henk van der Valk Script provided 'as-is'
    --------------------------------------------------------------------------------------------
    USE master
    go
    SET NOCOUNT ON

    DECLARE @crDate DateTime;
    DECLARE @hours DECIMAL(18,3), @Days int;
    DECLARE @FinalHours int, @FinalMinutes int, @FinalSeconds int, @total_seconds int;

    -- Determine uptime by checking Tempdb creation datetime:
    SELECT top 1 @crDate = create_date FROM sys.databases WHERE name = 'tempdb'
    SELECT @hours = DATEDIFF(ss, @crDate, GETDATE()) / CAST(60 AS Decimal) / CAST(60 AS Decimal);
    PRINT 'SQL Server instance ' + @@SERVERNAME + '\' + @@SERVICENAME + ' is Up since: ' + CAST(@crDate as varchar);

    -- From hours to days:
    SELECT @Days = @hours / CAST(24 AS Decimal);

    -- Determine the remaining part of the hours:
    SELECT @FinalHours = @hours - (@Days * CAST(24 AS Decimal))

    -- Remaining minutes:
    SELECT @FinalMinutes = (@hours - (@Days * CAST(24 AS Decimal)) - @FinalHours) * 60;

    -- Remaining seconds:
    SELECT @FinalSeconds = (((@hours - (@Days * CAST(24 AS Decimal)) - @FinalHours) * 60) - @FinalMinutes) * 60;

    PRINT 'Or: ' + CAST(@Days as varchar) + ' Days, ' + CAST(@FinalHours as varchar) + ' Hours,'
        + CAST(@FinalMinutes as varchar) + ' Minutes and ' + CAST(@FinalSeconds as varchar) + ' Seconds.'

    -- Total uptime in seconds:
    SELECT @total_seconds = (CAST(@Days AS decimal(12,2)) * 24 * 3600
                           + CAST(@FinalHours AS decimal(12,2)) * 3600
                           + CAST(@FinalMinutes AS decimal(12,2)) * 60)
                           + CAST(@FinalSeconds AS decimal(12,2))
    PRINT 'Total uptime in seconds: ' + CONVERT(VARCHAR(20), @total_seconds)

    SELECT @@SERVERNAME as Hostname,
           @@SERVICENAME as Instancename,
           @crDate AS SQL_Start_Date_Time,
           @total_seconds as TotalSeconds_Up

    -- Note: bigint arithmetic, so the GB columns are truncated to whole gigabytes
    SELECT DB_NAME(database_id) AS [Database Name],
           file_id,
           io_stall_read_ms,
           num_of_reads,
           (num_of_bytes_read / 1024 / 1024 / 1024) as GB_Read_Total,
           num_of_bytes_read / @total_seconds * 3600 * 24 / 1024 / 1024 / 1024 as AVG_GB_read_per_day_ESTIMATE,
           CAST(io_stall_read_ms / (1.0 + num_of_reads) AS NUMERIC(10, 1)) AS [avg_read_stall_ms],
           io_stall_write_ms,
           num_of_writes,
           num_of_bytes_written / 1024 / 1024 / 1024 as GB_Written_Total,
           num_of_bytes_written / @total_seconds * 3600 * 24 / 1024 / 1024 / 1024 as AVG_GB_Written_per_day_ESTIMATE,
           CAST(io_stall_write_ms / (1.0 + num_of_writes) AS NUMERIC(10, 1)) AS [avg_write_stall_ms],
           io_stall_read_ms + io_stall_write_ms AS [IO_Stalls],
           num_of_reads + num_of_writes AS [Total_IO],
           CAST((io_stall_read_ms + io_stall_write_ms) / (1.0 + num_of_reads + num_of_writes) AS NUMERIC(10, 1)) AS [AVG_IO_stall_ms]
    FROM sys.dm_io_virtual_file_stats(NULL, NULL)
    --ORDER BY AVG_IO_stall_ms DESC ;
    ORDER BY GB_Read_Total DESC
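The uptime part of the script can also be sketched more compactly. The snippet below assumes SQL Server 2008 or later, where sys.dm_os_sys_info exposes a sqlserver_start_time column. It is an alternative to reading the tempdb creation date, not the method the script above uses:

```sql
-- Alternative uptime sketch.
-- Assumes SQL Server 2008 or later and VIEW SERVER STATE permission.
SELECT sqlserver_start_time,
       DATEDIFF(SECOND, sqlserver_start_time, GETDATE()) AS uptime_seconds
FROM sys.dm_os_sys_info;
```

Both approaches should report nearly the same start time, since tempdb is recreated when the instance starts.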

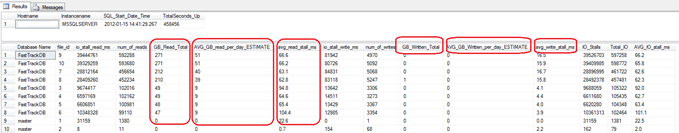

Sample VFS Query output

The sample output shows the regular VFS output with some additional columns to quantify the workload; for each database file it shows GB_Read_Total since start, AVG_GB_read_per_day_ESTIMATE (assuming a 24×7 operation), avg_read_stall_ms (the average time in milliseconds that users have waited for reads issued on the file), and similar columns for the write stats.

Don’t be surprised when tempdb traffic is by far the largest disk IO consumer, or when an imbalance between database files and stall timings shows up. This could be a good place to start monitoring the effective IO response timings of the LUNs you have available, to see whether they cope well or whether you are suffering from some hotspots.
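One way to look for such hotspots is to roll the file stats up per drive. The sketch below joins the DMV to sys.master_files and groups on the first two characters of the physical file name. That grouping is an assumption: it only works when every file lives on a plain drive letter such as D:, so mount points or UNC paths would need a different grouping:

```sql
-- Per-drive rollup sketch; assumes all files sit on plain drive letters (e.g. D:).
SELECT LEFT(mf.physical_name, 2) AS drive,
       SUM(vfs.num_of_bytes_read)    / 1024 / 1024 / 1024 AS GB_Read_Total,
       SUM(vfs.num_of_bytes_written) / 1024 / 1024 / 1024 AS GB_Written_Total,
       -- same "1.0 + count" guard against division by zero as in the main script
       CAST(SUM(vfs.io_stall_read_ms)  / (1.0 + SUM(vfs.num_of_reads))  AS NUMERIC(10, 1)) AS avg_read_stall_ms,
       CAST(SUM(vfs.io_stall_write_ms) / (1.0 + SUM(vfs.num_of_writes)) AS NUMERIC(10, 1)) AS avg_write_stall_ms
FROM sys.dm_io_virtual_file_stats(NULL, NULL) AS vfs
JOIN sys.master_files AS mf
  ON mf.database_id = vfs.database_id
 AND mf.file_id     = vfs.file_id
GROUP BY LEFT(mf.physical_name, 2)
ORDER BY avg_read_stall_ms DESC;
```

A drive whose average stall is far above the others, while carrying a similar volume, is a candidate for a closer look.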

Wrap up

The information provided by the sys.dm_io_virtual_file_stats DMV will help you to quantify the daily disk IO workload of your SQL Servers. When you store the results over time, they will also show you whether the overall usage of your SQL environment is increasing.
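Storing the results can be as simple as copying the DMV into a table on a schedule. The sketch below is one possible layout, not a prescribed one: the table name dbo.vfs_snapshot and the column selection are hypothetical, and the table is assumed to live in a utility database of your choice rather than in master:

```sql
-- Hypothetical snapshot table; the name and column selection are assumptions.
CREATE TABLE dbo.vfs_snapshot
(
    snapshot_time        datetime NOT NULL,
    database_id          smallint NOT NULL,
    file_id              smallint NOT NULL,
    num_of_bytes_read    bigint   NULL,
    num_of_bytes_written bigint   NULL,
    io_stall_read_ms     bigint   NULL,
    io_stall_write_ms    bigint   NULL
);
GO

-- Run on a schedule (for example once a day) to record the cumulative counters.
INSERT INTO dbo.vfs_snapshot
       (snapshot_time, database_id, file_id,
        num_of_bytes_read, num_of_bytes_written,
        io_stall_read_ms, io_stall_write_ms)
SELECT GETDATE(), database_id, file_id,
       num_of_bytes_read, num_of_bytes_written,
       io_stall_read_ms, io_stall_write_ms
FROM sys.dm_io_virtual_file_stats(NULL, NULL);
```

The counters are cumulative and start again from zero when the instance restarts, so a trend report should work on the difference between consecutive snapshots and discard negative differences.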

I’ve seen VFS stats of production databases that read on average 6-19 terabytes per day. When you update more than 1 TB per day on your main production databases, please shoot me a mail; your workload very likely qualifies for SQL Server Parallel Data Warehouse!

2 Responses

Love the script and ran it on one of our production servers, which hosts about 1,300 databases. Our top DB is at 2.4TB writes and about 1.3TB reads a day. Most of the other DBs are around 200-700GB a day for reads and writes, so the total is huge!

Thanks for sharing Richard! Are the R/W IO response timings ok or are there some crazy numbers in there?