Many of the performance problems I have seen over the years were combinations of both hardware and software; some of them were very simple to detect and figure out, and some took some time to resolve… There’s a very common one that you can detect easily during a coffee break, just by looking at the Windows Task Manager of the server that you are working on… Many of the servers in your company are probably “out of the box” configurations which have never been thoroughly checked for maximum disk and network throughput, or the network properties simply aren’t available to you because of tight policies.

Use Task Manager to display the network throughput

The Windows Task Manager is generally available to you and can be expanded quickly to display the effective real-time network throughput:

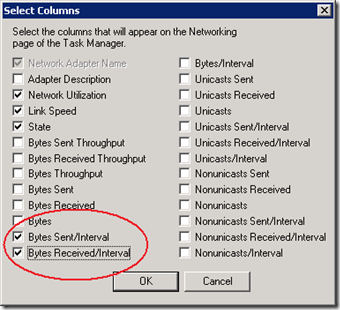

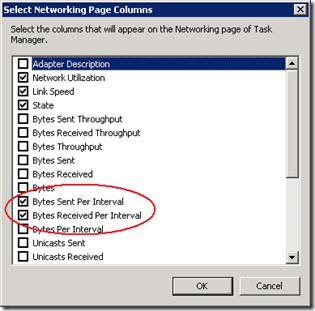

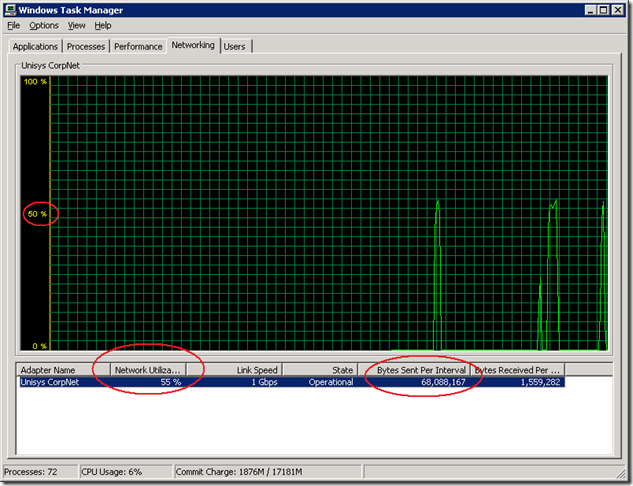

Open the Windows Task Manager, select the Networking tab, select View from the menu and select both columns, “Bytes Sent/Interval” and “Bytes Received/Interval”, which by default are set to a 1 second refresh rate:

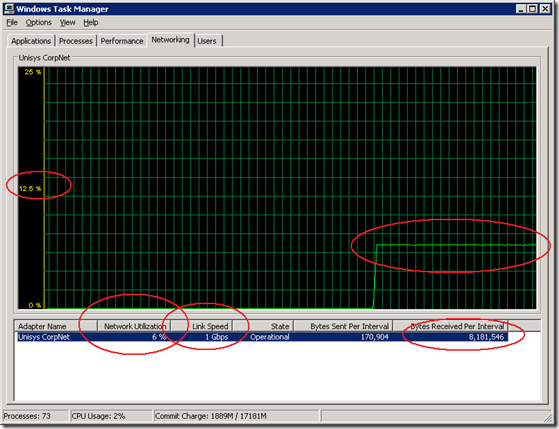

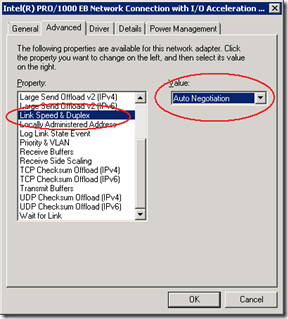

So far probably nothing new under the sun… but here it comes: a 1 Gbit network interface might show up as connected with a 1 Gbps link speed, yet in many cases (I’ve seen it at least a dozen times…) the effective throughput is way less. Either the network adapter is using a fallback rate, or a lower speed was negotiated with the switch as part of the “Auto Negotiate” handshake, or the network is patched into an old 100 Mbit switch, or simply because the installation manual states that the link speed should be set to 100 Mbit Full Duplex…

Coffee or Tea

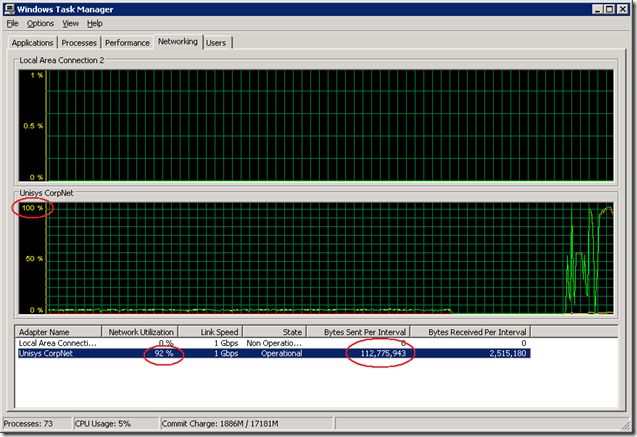

With a fresh cup of coffee or tea just have a look at the overall utilization and throughput of the network. If the utilization is stuck around 12.5% you should know what time it is: you are looking at a network interface that is effectively limited to 100 Mbit/sec, which has a maximum throughput of roughly 8 to 13 MByte/sec, and this also shows in Task Manager as a flatliner.
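To make the arithmetic behind that ceiling concrete: 100 Mbit/sec divided by 8 bits per byte is 12.5 MByte/sec before any protocol overhead. Below is a minimal Python sketch that does the conversion and checks whether a series of throughput samples is pinned near such a ceiling. The function names, the 10% tolerance and the sample values are illustrative assumptions; they are not read from Task Manager.

```python
def link_speed_to_mbyte_per_sec(link_mbit_per_sec: float) -> float:
    """Theoretical ceiling of a link in MByte/sec (8 bits per byte, no overhead)."""
    return link_mbit_per_sec / 8.0


def is_flatliner(samples_mbyte_per_sec, ceiling_mbyte_per_sec, tolerance=0.10):
    """Return True when every sample sits within `tolerance` of the ceiling.

    `tolerance` is an assumed fraction (10% by default), chosen for illustration.
    """
    if not samples_mbyte_per_sec:
        return False
    lower = ceiling_mbyte_per_sec * (1.0 - tolerance)
    upper = ceiling_mbyte_per_sec * (1.0 + tolerance)
    return all(lower <= sample <= upper for sample in samples_mbyte_per_sec)


if __name__ == "__main__":
    ceiling = link_speed_to_mbyte_per_sec(100)  # a 100 Mbit/sec link
    print(ceiling)

    # Made-up samples, typed in by hand for illustration only.
    suspicious = [11.6, 11.7, 11.6, 11.7]
    healthy = [30.0, 55.0, 68.0, 41.0]
    print(is_flatliner(suspicious, ceiling))
    print(is_flatliner(healthy, ceiling))
```

A steady series just under the ceiling is the flatliner pattern; a series that swings well above it means the link is not capped at 100 Mbit/sec.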

If you hear network folks talk about 1Gbit switch network utilization rates of max. 12.5% after they upgraded the backbone switches 6 months ago, it’s time to start smiling :-)

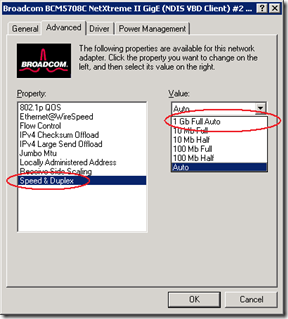

Some default Intel and Broadcom Network card settings will look similar to these:

How to test the throughput of a network interface quickly

To check the maximum throughput of a network interface yourself, a simple quick & dirty check is to just copy some large files around; a bit more work is to take the “scientific approach” and use a tool like NTttcp (see also the network tuning tips from our ETL WR article, “Initial Tuning of the Network Configuration with NTttcp”, and http://www.microsoft.com/whdc/device/network/TCP_tool.mspx).

1) Map a network drive to the destination location using its TCP/IP address (on the command line type NET USE * \\192.168.168.10\c$, where the address is just an example).

2) Copy a large file of a few hundred megabytes (like a SQL Server installation file) to the destination you just mapped.

If you start a single file copy with Windows Explorer, you will typically see a throughput somewhere between 30 and 68 MByte/sec. As you can see, it’s much faster and not a clean, steady, flat throughput.
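If you prefer a number over eyeballing the graph, the quick & dirty copy test can be timed with a few lines of Python. This is only a rough sketch: the function name is made up for this example, and the result also includes disk speed and file cache effects on both ends, so treat it as an indication and not as a benchmark.

```python
import os
import shutil
import time


def measure_copy_throughput(source_path: str, destination_path: str) -> float:
    """Copy one file and return the average throughput in MByte/sec.

    Uses 1 MByte = 1,000,000 bytes so the result lines up with
    link speeds quoted in Mbit/sec.
    """
    size_bytes = os.path.getsize(source_path)
    started = time.perf_counter()
    shutil.copyfile(source_path, destination_path)
    elapsed = time.perf_counter() - started
    if elapsed <= 0:
        raise ValueError("copy finished too quickly to time")
    return size_bytes / elapsed / 1_000_000
```

For example, with the drive from step 1 mapped as Z:, a call like `measure_copy_throughput(r"C:\temp\bigfile.iso", r"Z:\bigfile.iso")` would return the average rate for that one copy; both paths are hypothetical.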

Jumbo frames

Check with your network folks whether the network switches in your company support Jumbo frames; if so, it’s worth checking whether recurring tasks like the overnight data loading can benefit from them. With support enabled for large Jumbo frames of 9000, 9014 or even 16128 bytes (versus the default, typically 1500 bytes for Ethernet), the throughput can go up to even over 100 MByte/sec per Gbit link!
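One way to see why larger frames help is to count what a 1 Gbit link has to carry per frame. The sketch below is back-of-the-envelope arithmetic under stated assumptions: standard Ethernet framing overhead of 38 bytes per frame on the wire, IPv4 plus TCP headers of 40 bytes without options, and a link that is otherwise idle. The constant and function names are made up for this example, and real-world gains also depend on the NIC, the driver and CPU load.

```python
# Assumed per-frame costs (standard Ethernet, IPv4 + TCP without options).
ETHERNET_OVERHEAD_BYTES = 38  # preamble/SFD 8 + header 14 + FCS 4 + inter-frame gap 12
TCP_IP_HEADER_BYTES = 40      # IPv4 header 20 + TCP header 20


def payload_efficiency(mtu_bytes: int) -> float:
    """Fraction of the bytes on the wire that is actual TCP payload."""
    return (mtu_bytes - TCP_IP_HEADER_BYTES) / (mtu_bytes + ETHERNET_OVERHEAD_BYTES)


def frames_per_second(mtu_bytes: int, link_mbit_per_sec: float = 1000) -> float:
    """Full-size frames per second needed to saturate the link."""
    wire_bytes_per_sec = link_mbit_per_sec * 1_000_000 / 8
    return wire_bytes_per_sec / (mtu_bytes + ETHERNET_OVERHEAD_BYTES)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(mtu, round(payload_efficiency(mtu), 4), round(frames_per_second(mtu)))
```

Under these assumptions the payload share rises from about 95% to about 99%, and the number of frames per second needed to fill a Gbit link drops from roughly 81,000 to roughly 14,000, so each server handles far fewer packets for the same amount of data.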

Wrap Up

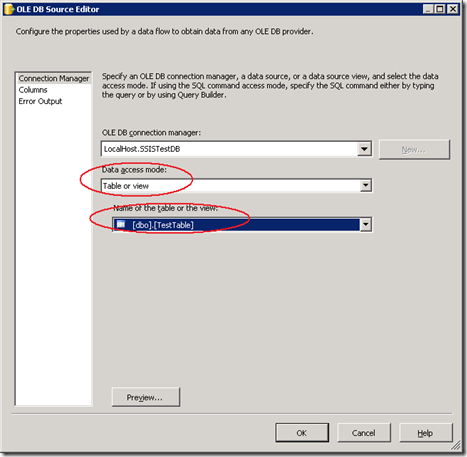

Make it a habit to select the Bytes Sent + Received/Interval columns in Task Manager and have a quick look at the throughput… watch out for the 12.5% utilization and flatliners, indicating a bottleneck. If you run SSIS data loading tasks, for example, from a different server than your database, you can get significantly higher throughput by tuning the network stack, such as by using Jumbo Frames. If you sped up one of your servers because of this tip, just leave a small comment ;-) !