How-to load data fast into SQL Server 2016

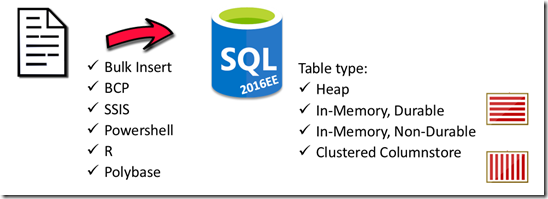

What will be the absolute fastest way to load data from a flatfile into a table within SQL Server 2016? A lot has changed since my initial post on this topic many years ago, ike the introduction of In-memory optimized tables and Updateable Columnstore table indexes. Also the list of data transport vehicles to choose from is growing: besides BCP, the T-SQL Bulk Insert command, SSIS as ETL tool and PowerShell there are some new ones added, like PolyBase, External R Script or ADF. In this post I will start with the checking how much faster the new durable & non-durable In-memory tables are!

Setting the Baseline

For these tests I’m using an Azure DS4_V2 Standard VM with 8 cores/28 GB of RAM and 2 HDD Volumes with host caching R/W enabled. (Both Luns provide ~275 MB/sec R/W throughput although the GUI states a limit of 60MB/sec). I generated a single ~60 Million row/7.2 Gigabyte TPCH lineitem flat file as data to load.

As baseline to for use for comparison we will use the time it takes to load the file into a Heap table:

BULK INSERT [LINEITEM_HEAP] FROM 'F:\TPCH\lineitem.tbl' WITH ( FIELDTERMINATOR = '|', ROWTERMINATOR = '\n' );

This regular Bulk Insert command completes within 7 minutes with an average of 143K rows/sec.

Enabling the test database for Memory optimized tables

The (in SQL2014/2016 Enterprise & developer Edition ) introduced in-memory tables are designed for very fast OLTP with many small transactions and high concurrency, which is a completely different type of workload as bulk inserting… but, just out of curiosity…lets give it a try!

There are 2 types of in-memory tables: durable and non-durable tables. The durable ones will persist data on disk, the non-durable ones won’t. To enable this option we have to do some housekeeping and assign a fast disk volume for hosting these files.

First, alter the database to enable the ‘Contains MEMORY_OPTIMIZED_DATA’ option followed by adding a File location and Filegroup that will contain the Memory-Optimized tables:

ALTER DATABASE [TicketReservations] ADD FILEGROUP [memory_optimized_filegroup_0] CONTAINS MEMORY_OPTIMIZED_DATA GO ALTER DATABASE [TicketReservations] ADD FILE ( NAME = N'memory_optimized_file', FILENAME = N'E:\data\memory_optimized_files' ) TO FILEGROUP [memory_optimized_filegroup_0] GO

The third thing to do is to add a separate memory pool to the SQL Server instance so it can keep all the data we will load into in-memory tables separate from its default memory pool:

Binding a database to a memory pool

The steps to define a separate memory pool and to bind a database to it are listed below:

Extra memory pools are managed via the SQL Resource Governor.

CREATE RESOURCE POOL [PoolHk] WITH( min_cpu_percent=0, max_cpu_percent=100, min_memory_percent=0, max_memory_percent=70, AFFINITY SCHEDULER = AUTO ) -- Dynamically change the value of MAX_MEMORY_PERCENT ALTER RESOURCE POOL PoolHk WITH (MAX_MEMORY_PERCENT = 80 ) -- RECONFIGURE enables resource governor ALTER RESOURCE GOVERNOR RECONFIGURE ALTER RESOURCE GOVERNOR RESET STATISTICS

The 4th and last step is to bind the test database to the new memory Pool with the sys.sp_xtp_bind_db_resource_pool command. In order for the binding to become effective we have to take the database offline and bring it back online. Once bound we can dynamically change the amount of memory assigned to its pool via the ‘ALTER RESOURCE POOL PoolHk WITH (MAX_MEMORY_PERCENT = 80 )’ command.

-- BIND the Database to the Pool exec sys.sp_xtp_bind_db_resource_pool N'TicketReservations', N'PoolHk'; -- take DB offline: ALTER DATABASE [TicketReservations] SET OFFLINE WITH ROLLBACK IMMEDIATE -- Bring the Database back Online: ALTER DATABASE [TicketReservations] SET ONLINE -- Deleting the Resource Pool: -- sys.sp_xtp_unbind_db_resource_pool N'TicketReservations' -- DROP RESOURCE POOL [PoolHk]

Bulk Insert into Durable In-Memory table

Now we are all set with the In-memory option enabled, we can create an in-memory table. Every memory-optimized table must have at least one index (either a Range- or Hash index ) which are completely (re-)composed in memory and are never stored on disk.

A durable table must have a declared primary key, which could then be supported by the required index. To support a primary key I added an extra row_number ROWID1 column to the table:

----- Durable table: CREATE TABLE [dbo].[LINEITEM_MO_Final] ( [ROWID1] [bigint] NOT NULL, [L_SHIPDATE] [smalldatetime] NOT NULL, .. [L_COMMENT] [varchar](44) COLLATE Latin1_General_100_BIN2 NOT NULL, constraint RowID_key primary key nonclustered hash (ROWID1) WITH (bucket_count=10000000) ) WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

Specifying a batch size of 1 (up to 5) Million rows to the bulk insert command helps to persist data to disk while the bulk insert is ongoing (instead of saving it all at the end); doing so minimizes memory pressure on the memory pool PookHK we created.

------------------------------------------------------ -- Bulk insert into Durable Memory Optimized table -- ------------------------------------------------------ BULK INSERT [LINEITEM_Memory_Optimized_D] FROM 'F:\TPCH\lineitem_export.csv' WITH ( FIELDTERMINATOR = '|', ROWTERMINATOR = '\n', Batchsize = 5000000 );

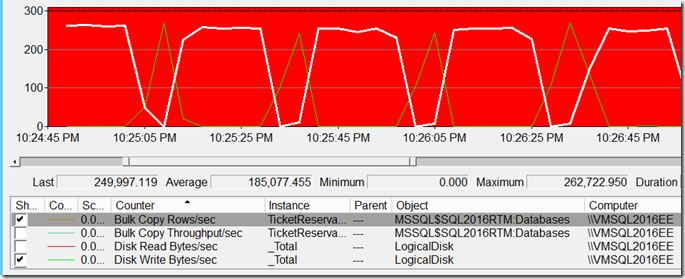

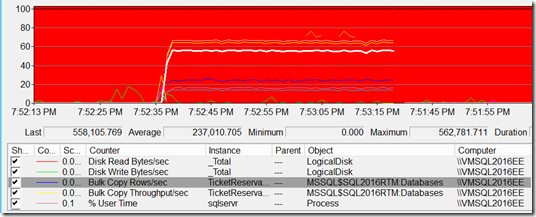

The data load into the durable In-Memory table completes in 5 minutes 28 seconds, or 183K Rows/sec. That’s an ‘okay’ time but not that much faster than our baseline. Looking at the sys.dm_os_wait_stats shows that the no.1 waitstat is ‘IMPPROV_IOWAIT’ which occurs when SQL Server waits for a bulk load I/O to finish. Looking at the Performance counter ‘Bulk Copy Rows/sec and Disk Write Bytes/sec shows the flushing to disk spikes of 275 MB/sec once a batch got in (the green spikes). That is the maximum of what the disk can deliver but doesn’t explain it all. Given the minor gain, we will park this one for future investigation.

Monitoring the Memory Pool

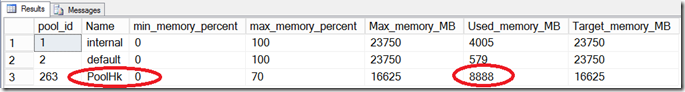

Via the ‘sys.dm_resource_governor_resource_pools’ dmv can we check if our in-memory table leverages the newly created ‘PoolHK’ memory Pool:

SELECT pool_id , Name , min_memory_percent , max_memory_percent , max_memory_kb/1024 AS Max_memory_MB , used_memory_kb/1024 AS Used_memory_MB , target_memory_kb/1024 AS Target_memory_MB FROM sys.dm_resource_governor_resource_pools

The output shows this is the case; the 7.2GB (+ some extra for the Rowid) got uncompressed loaded into the memory poolHk pool:

If you try to load more data than you have memory available to the pool you will get an proper message like this one:

The statement has been terminated.

Msg 701, Level 17, State 103, Line 5

There is insufficient system memory in resource pool ‘PookHK’ to run this query.

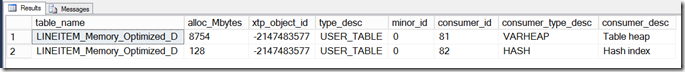

To look one level deeper at memory space allocation on a per In-memory table basis you can run the following query (taken from the SQL Server In-Memory OLTP Internals for SQL Server 2016 document):

USE TicketReservations

SELECT object_name(c.object_id) AS table_name, allocated_bytes /1024/1024 as alloc_Mbytes , a.xtp_object_id, a.type_desc, minor_id, memory_consumer_id as consumer_id, memory_consumer_type_desc as consumer_type_desc, memory_consumer_desc as consumer_desc FROM sys.memory_optimized_tables_internal_attributes a JOIN sys.dm_db_xtp_memory_consumers c ON a.object_id = c.object_id and a.xtp_object_id = c.xtp_object_id LEFT JOIN sys.indexes i ON c.object_id = i.object_id AND c.index_id = i.index_id WHERE c.object_id = object_id('dbo.LINEITEM_Memory_Optimized_D') ORDER BY allocated_bytes DESC

The data we just loaded is stored as a varheap structure with a hash index:

So far so good! Now lets move on and check out how staging in a non-durable table performs!

----- Non-Durable table:

if object_id('LINEITEM_Memory_Optimized_ND') is not null drop TABLE [dbo].[LINEITEM_Memory_Optimized_ND] go CREATE TABLE [dbo].[LINEITEM_Memory_Optimized_ND] ( [L_SHIPDATE] [smalldatetime] NOT NULL, [L_ORDERKEY] [bigint] NOT NULL, ..

[L_COMMENT] [varchar](44) COLLATE Latin1_General_100_BIN2 NOT NULL, ,INDEX [Staging_index] NONCLUSTERED HASH ([L_SHIPDATE]) WITH ( BUCKET_COUNT = 2048576) ) WITH ( MEMORY_OPTIMIZED = ON , DURABILITY = SCHEMA_ONLY )

Bulk Insert into Non-Durable In-Memory table

For IMND tables we do not need a Primary key so we just add and Non-clustered Hash index and set DURABILITY = SCHEMA_ONLY. The Bulk insert Data loading into the non-durable table completes within 3 minutes with a throughput of 335K rows/sec (vs 7 minutes)! This is 2.3x faster then inserting into a heap table. For the staging of data this definitely a quick win!

SSIS Single Bulk Insert into a Non-Durable table

Traditionally SSIS is the fastest way to load a file quickly into SQL Server because SSIS will handle all the data pre-processing so the SQL Server engine can spend its CPU ticks on persisting the data to disk. Will this still be the case when inserting the data into a non-durable table?

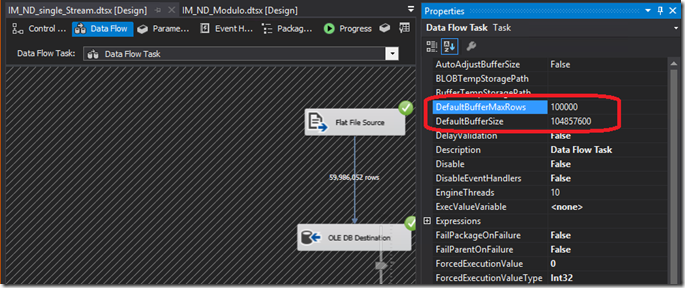

Below a summary of the tests I ran with SSIS for this post: the SSIS Fastparse option and the ‘DefaultBufferMaxRows’ and ‘DefaultBufferSize’ settings are the main performance boosters. Also the Native OLE DB (SQLOLEDB.1) provider performs slightly better than the SQL Native Client (SQLNCLI11.1). When you run SSIS and SQL Server side by side,increasing the network packet size isn’t needed.

Net result: a basic SSIS package that reads a flat file source and writes the data out directly to the Non-Durable table via an OLE DB destination performs similar as the Bulk Insert command into a IMND table: the 60 Million rows are loaded in 2minutes 59seconds or 335K rows/sec, identical to the Bulk insert command.

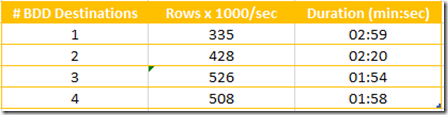

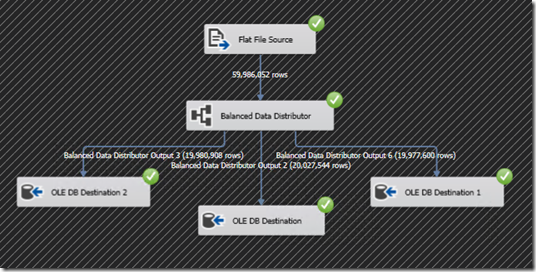

SSIS with Balanced Data Distributor

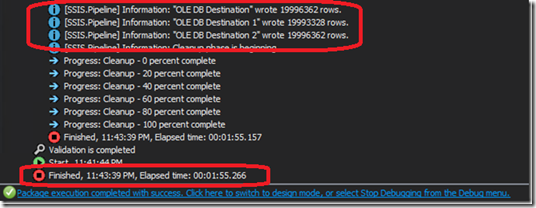

But wait… the in-memory tables are designed to work ‘lock & latch’ free so this means that we can load data also via multiple streams! That is easy to achieve with SSIS; the Balanced Data Distributor will bring just that! (the BDD is listed in the Common section of the SSIS Toolbox) Adding the BDD component and inserting the data into the same Non-durable table with 3 streams provides the best throughput: we are now up to 526000 Rows/sec!

Looking at this very flat line, with only 160% of CPU time used by SQLServer, it seems we are hitting some bottleneck: I quickly tried to be creative by leveraging the modulo function and added 2 more data flows within the package (each processing 1/3 of the data) but it that isn’t improving much (1 min52sec) so a great topic to investigate for a future post!

Wrap-up

The In-Memory Non-Durable table option brings some serious performance improvement for staging of data! loading data 1.5x faster with a regular Bulk insert and up to 3.6x times faster with SSIS. This option, primarily designed to speed up OLTP, can also make a huge difference to shrink your batch window quickly!

(To be continued!)

8 Responses Leave a comment

Hi Henk,

I’m bulk inserting 10 million rows to both a non durable table and a durable table, and I’m getting the exact same speeds for both – any ideas why? Am I doing something wrong?

CREATE TABLE Users_ND (

Id INT NOT NULL IDENTITY(1,1) PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT=1000000),

Username VARCHAR(200) NOT NULL

) WITH (MEMORY_OPTIMIZED=ON, DURABILITY = SCHEMA_ONLY);

go

CREATE TABLE Users_Mem (

Id INT NOT NULL IDENTITY(1,1) PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT=1000000),

Username VARCHAR(200) NOT NULL

) WITH (MEMORY_OPTIMIZED=ON, DURABILITY = SCHEMA_AND_DATA);

go

BULK INSERT Users_ND

FROM ‘users-huge.csv’

WITH (FIRSTROW = 2, FIELDTERMINATOR = ‘,’, ROWTERMINATOR = ‘\n’, BATCHSIZE = 500000);

BULK INSERT Users_Mem

FROM ‘users-huge.csv’

WITH (FIRSTROW = 2, FIELDTERMINATOR = ‘,’, ROWTERMINATOR = ‘\n’, BATCHSIZE = 500000);

Hi Dan,

do you use SSD/fast storage? this would be the first thing to check via the sql waitstats

If disks are not the bottleneck, Have you tried to load your data via SSIS also?

The server I’m testing on today does not have SSD. I will re-test with a SSD later. However, I thought that the non-durable table would incur zero disk I/O and therefore be significantly faster than the durable table. I’m pretty confused right now.

Hi Dan,

I will email you 2 scripts to snapshot and check your sql server waitstats while loading!

Any chance you can share your original dataset compressed so we can compare benchmarks. Excellent well written article.

in your mail!

Hi !

Between Bulk Insert and Script R, how is better ?

a native SQL Bulk insert is the faster one!